Adding JOIN support for parallel replicas on ClickHouse®️

We recently introduced a pull request to ClickHouse that enables simple JOIN support for parallel replicas on ClickHouse. The solution may be simple and naive, but the ceiling for performance on distributed queries just got WAY higher.

How do you handle complex queries over truly massive datasets?

This is the question that paradigms like massively parallel processing (MPP) and distributed computing seek to answer. The idea is simple: spread the work of a complex task across multiple distributed machines so they can work in parallel to more rapidly return a response.

Data warehouses have made this a mainstream concept, leveraging distributed computing to handle large analytical queries at scale.

At Tinybird, we’re also focused on improving performance at scale with ClickHouse, the columnar database that underpins our real-time data platform.

ClickHouse is very fast out of the box, and you’d be surprised how quickly it can run complex queries over many rows of data on a single machine, returning results in a fraction of a second for Tinybird's data APIs.

But as you’ll see below, our customers are bringing us some exceptionally complex use cases, such as large JOINs over tables containing over 50 billion records. So, we’re asking the question again:

How do you handle complex queries over truly massive datasets?

In this blog post, I’ll explain how we’re exploring the use of parallel replicas in ClickHouse to horizontally scale query throughput, and what we did to solve a very critical shortcoming in ClickHouse’s parallel replicas implementation.

Why do we care about parallel replicas?

Read this part if you're new to ClickHouse or the way it handles distributed query execution. If you're familiar with the concepts of sharding and parallel replicas, you can skip ahead.

ClickHouse is really fast right out of the box. You can run clickhouse-local on a single machine using the standard MergeTree engine and easily process simple aggregating and filtering queries over 100M rows in less than a second.

But as your data grows to billions of rows or your queries get increasingly complex, you eventually have to replicate data to different servers and parallelize your tasks. You can use engines like ReplicatedMergeTree to automatically replicate data across ClickHouse servers. While replication is useful for load balancing queries across many machines to improve query concurrency, in the end, you’re still running a query on a single machine. Until recently, if you wanted to process a single query in parallel across distributed machines on ClickHouse, you used sharding.

You can use the ReplicatedMergeTree engine to replicate data across ClickHouse servers, but if you wanted to distribute a query across multiple machines, you had to use sharding, which has some limitations.

What is sharding?

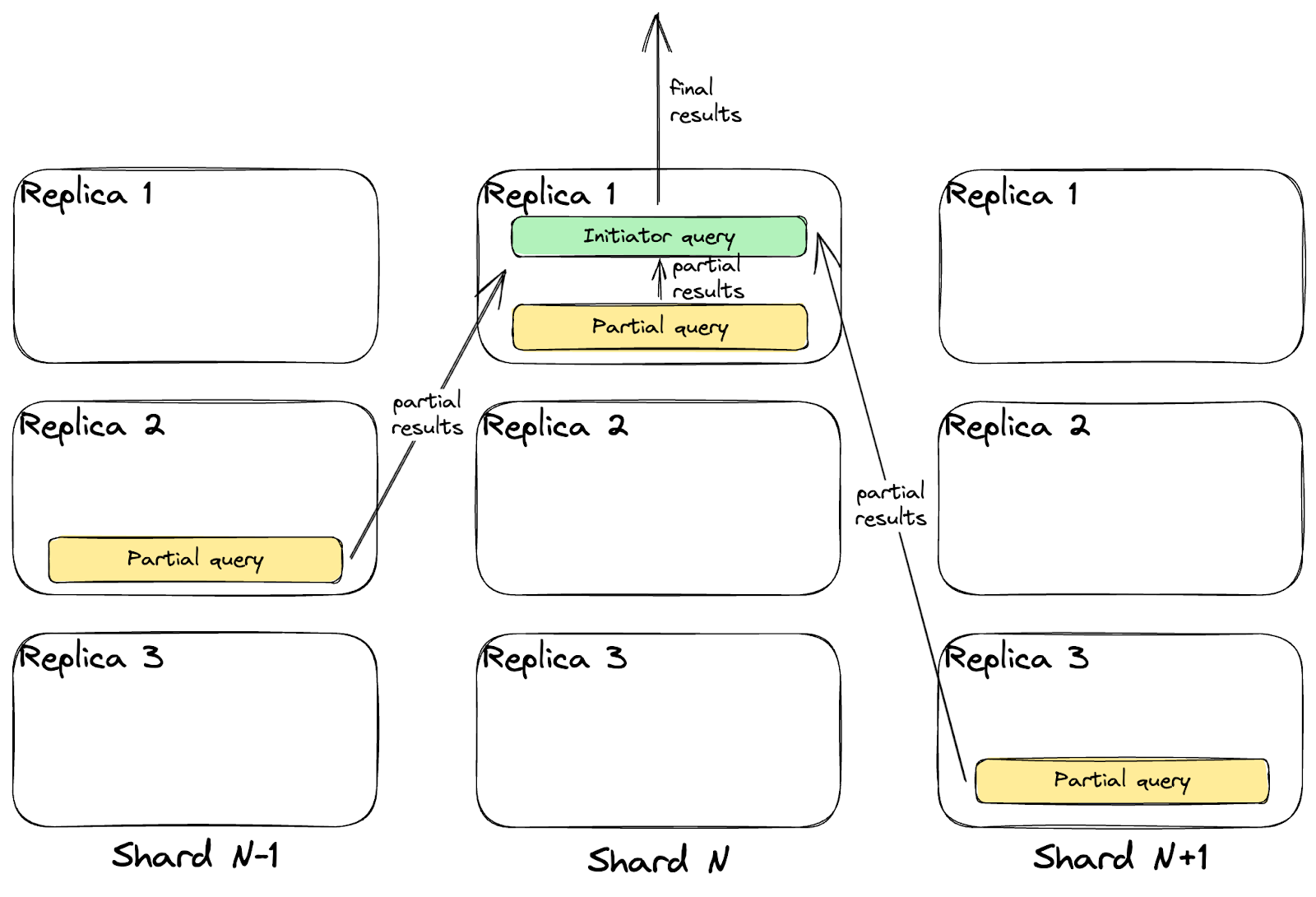

With sharding, you split your table into parts that live on different machines and process your queries across those parts. This way, queries that need to access more data or use more compute than a single server can handle can still run. This comment from the ClickHouse GitHub repo explains this concept quite well, and if you want more information on sharding in ClickHouse, you should read this.

But now, with its 23.3 release, ClickHouse has introduced support for parallel replicas, which offer a new way to scale query performance with some unique benefits.

Sharding vs Parallel Replicas

Parallel replicas and sharding are similar but different. With sharding, you’re splitting the contents of a single table across multiple servers, so you don’t have fully replicated copies of the data, only “shards” of a table distributed across these servers.

With parallel replicas, all the servers involved have a complete copy of the data. When you run a query, each server with a replica is given a piece of the query to work on, and their results are combined. That’s why it’s called “parallel replicas”: you’re parallelizing query execution across replicated tables. And with parallel replicas, you get the performance benefits of sharding with the fault tolerance of replication in a single package.
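To make the difference concrete, here is a minimal Python sketch of the two layouts. It is a toy model, not how ClickHouse stores or reads data: the table is a list of tuples, and every function name is hypothetical. With sharding each server owns only some rows; with parallel replicas each server holds everything but is assigned a disjoint slice to read.

```python
# Toy model only: a "table" is a list of (user_id, amount) rows.
# All function names are hypothetical and exist just for this illustration.

def make_table(n_rows):
    return [(i % 7, i) for i in range(n_rows)]

def shard_table(table, n_servers):
    """Sharding: each server stores only the rows whose key maps to it."""
    shards = [[] for _ in range(n_servers)]
    for row in table:
        shards[row[0] % n_servers].append(row)
    return shards

def replicate_table(table, n_servers):
    """Replication: every server stores a complete copy of the table."""
    return [list(table) for _ in range(n_servers)]

def sum_with_sharding(shards):
    # Each server aggregates the rows it owns; the initiator merges partial sums.
    return sum(sum(amount for _, amount in shard) for shard in shards)

def sum_with_parallel_replicas(replicas):
    # Each replica holds everything but reads only the slice it was assigned.
    n = len(replicas)
    partials = []
    for idx, replica in enumerate(replicas):
        assigned = replica[idx::n]  # stand-in for a coordinator handing out work
        partials.append(sum(amount for _, amount in assigned))
    return sum(partials)

table = make_table(1000)
expected = sum(amount for _, amount in table)
shards = shard_table(table, 4)
replicas = replicate_table(table, 4)
sharded_total = sum_with_sharding(shards)
replica_total = sum_with_parallel_replicas(replicas)
print(sharded_total == expected == replica_total)
```

Both strategies return the same total. What differs is where the data lives: the shards together hold one copy of the table, while every replica holds all of it.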

ClickHouse also has zero-copy replication, which, at a high level, means that rather than replicating data between local disks, you can instead write it to external storage like S3, allowing many servers to access the same data. When combined with parallel replicas, zero-copy replication provides some obvious advantages; you can perform fewer write operations and store less data on disk while servers still have access to full replicas over which they can each run their piece of a distributed query.

Sounds like an amazing way to run some big queries over big data in ClickHouse, right? Not so fast.

The problem with parallel replicas in ClickHouse

While parallel replicas in ClickHouse offer storage-efficient distributed performance boosts, they come with a catch: They don’t support JOINs. If you try to execute a query with a JOIN on parallel replicas, it will fall back to executing the query on the primary server (the initiator). If that server can’t handle the query load, you get an error.

One of Tinybird’s unique value propositions to our users is that we enable JOINs on various data sources when developing real-time data products. This is useful for doing things like enriching event streams from Kafka with dimensions from Snowflake, and it’s a very powerful feature in the hands of data engineers who utilize Tinybird. In fact, nearly every single non-trivial use case that our customers tackle requires JOINs. This is what Tinybird customers want: to unify their data stack, write complex SQL queries, and publish them as APIs that respond in milliseconds.

Nearly every non-trivial use case our customers try to solve involves JOINs. We must have them if we're to use parallel replication.

If we are going to be able to adopt parallel replicas and the benefits they offer, we need support for JOINs.

Adding support for JOINs on parallel replicas

Because we love the idea of parallel replicas on ClickHouse and because we absolutely need to support JOINs to support our customers, we decided to add JOIN support for parallel replicas in ClickHouse with this pull request.

Our pull request to add simple JOIN support to parallel replicas in ClickHouse was recently merged, enabling powerful new use cases.

We identified a simple use case, described here, and some minimal expectations to meet:

- Running with or without parallel replicas should output the same results, given a large enough problem (dataset equal problem).

- Adding more replicas should make the queries faster (with caveats).

Then, just so that we could validate how much effort was required for the whole feature, we reduced the problem scope further:

- Focus on pure replicas (forgetting custom key for now).

- Only INNER JOIN (ignore the other dozen types of JOINs).

- Only the current ClickHouse interpreter (and not the Analyzer).

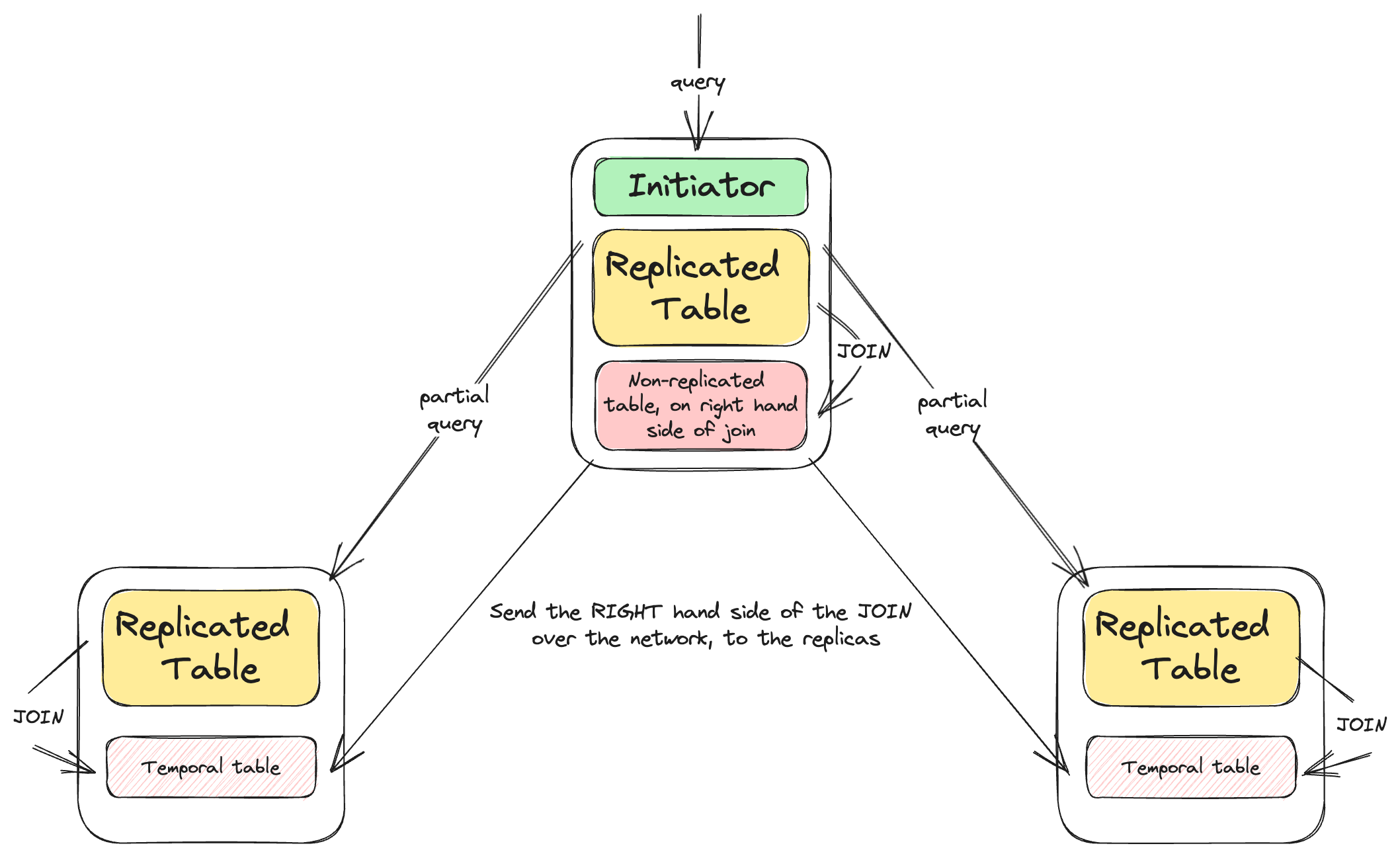

When you do a JOIN, you have two sets of data, known as the left and right hand side of the JOIN. If you distribute a JOIN query to multiple servers, each server needs to be able to read the data on both sides of the JOIN. The right hand side of a JOIN does not always have to be another distributed table (it might be the result of another query) and even if it is, there’s no guarantee that the servers with replicas of the left hand side data are also replicas of the right hand side data.

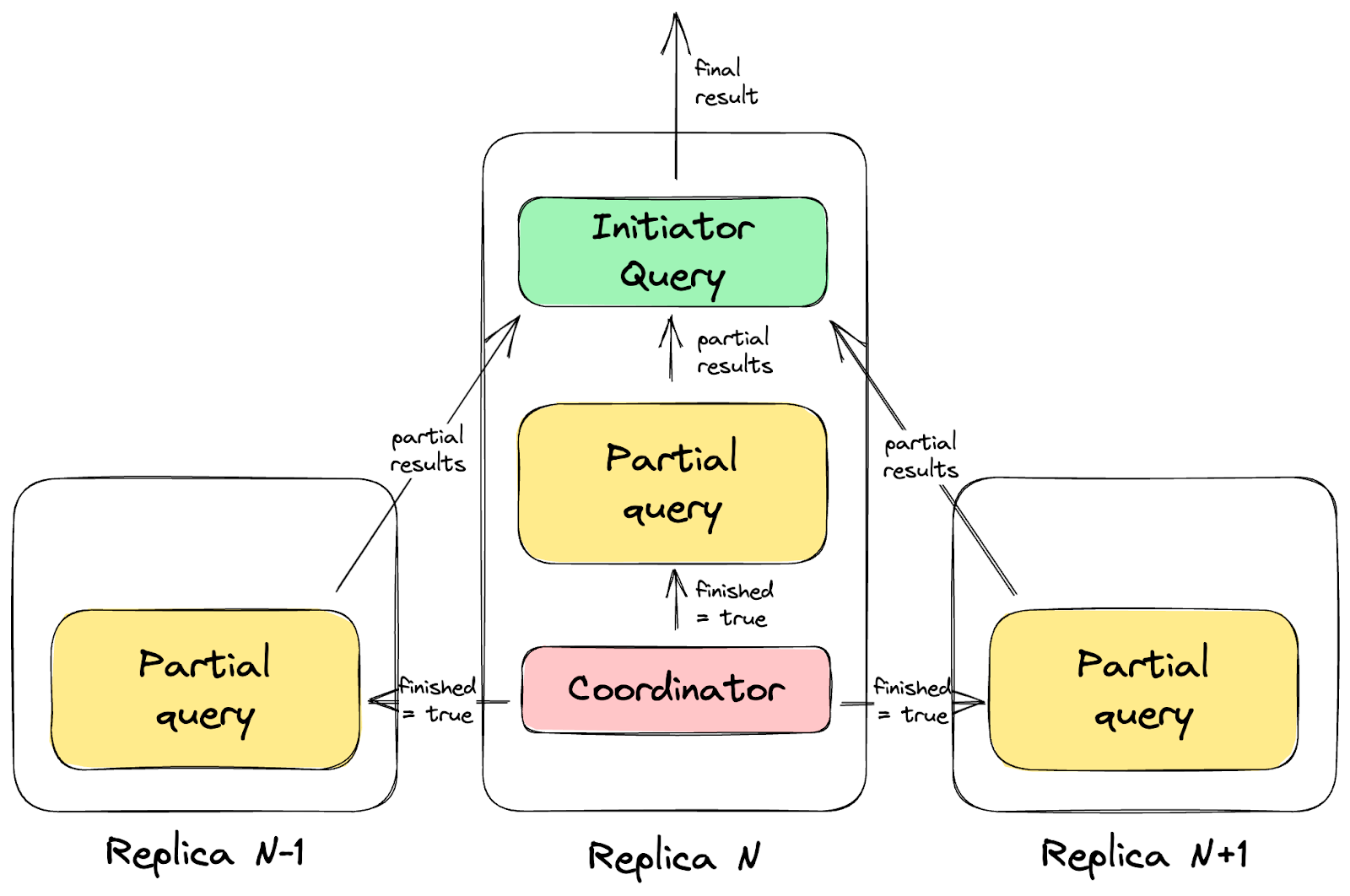

This means that there needs to be a mechanism to ensure that each replica has access to the right hand side data. In distributed SQL engines, this is typically handled by data shuffling. There are several shuffling techniques that are appropriate in different scenarios, but we chose to use the most naive solution: broadcasting the right hand data to all replicas.

To support JOINs on parallel replicas, there needs to be a mechanism to allow each replica to access the right hand side of the JOIN. We used a naive version of data shuffling: broadcasting.

The idea is to take the right hand side of the JOIN (if it’s a full table you can skip this step, but it’s usually a good idea to JOIN with conditions anyway), execute that subquery independently, and gather the result in a temporary table on the coordinator. The coordinator then broadcasts the result to all replicas and rewrites the initial query to replace the subquery with the temporary table.
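The flow described above can be sketched in a few lines of Python. This is a simplified illustration under stated assumptions, not the code from the pull request: tables are in-memory lists, the "temporary table" is a dictionary keyed by the JOIN key, replicas are simulated with a loop, and all function names are hypothetical.

```python
from collections import defaultdict

def run_right_subquery(dimension_rows, predicate):
    """Step 1: the coordinator runs the right-hand side on its own."""
    return [row for row in dimension_rows if predicate(row)]

def build_temporary_table(rows):
    """Step 2: gather the result into a temporary lookup keyed by the JOIN key."""
    table = defaultdict(list)
    for key, value in rows:
        table[key].append(value)
    return dict(table)

def inner_join(left_rows, right_table):
    """INNER JOIN of left rows against the lookup built from the right side."""
    out = []
    for key, payload in left_rows:
        for value in right_table.get(key, []):
            out.append((key, payload, value))
    return out

def join_on_parallel_replicas(left_rows, dimension_rows, predicate, n_replicas):
    right_table = build_temporary_table(run_right_subquery(dimension_rows, predicate))
    results = []
    for replica in range(n_replicas):
        # Step 3: every replica receives the same right_table (the broadcast)
        # and joins it against its own disjoint slice of the left side.
        left_slice = left_rows[replica::n_replicas]
        results.extend(inner_join(left_slice, right_table))
    return results

events = [(i % 5, f"event-{i}") for i in range(20)]
countries = [(0, "ES"), (1, "FR"), (2, "US"), (3, "DE")]
keep = lambda row: row[1] != "DE"  # the condition on the right-hand side

single_node = inner_join(events, build_temporary_table(run_right_subquery(countries, keep)))
distributed = join_on_parallel_replicas(events, countries, keep, n_replicas=4)
print(sorted(distributed) == sorted(single_node))
```

The final comparison mirrors the first expectation listed earlier: running with or without parallel replicas should produce the same rows, here compared as sorted lists because the merge order across replicas is not fixed.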

You could find a better solution than broadcasting, but it has some benefits for this scope because it works regardless of JOIN type, sorting keys, operations done before or after the JOIN, etc. Certainly, other more advanced techniques would work better in some cases. For example, sharding the data based on the JOIN key would be a cleaner implementation. We can also make many improvements to this basic approach, such as sharing with each replica only the data that it needs to use or improving the coordination using the knowledge that a JOIN is taking place.
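For contrast, here is a rough Python sketch of the alternative mentioned above, partitioning both sides by the JOIN key so that each replica only needs the matching slice of the right-hand side. It illustrates the general technique, not anything implemented in ClickHouse; the modulo partitioning and all names are assumptions made for the example.

```python
def partition_by_key(rows, n_partitions):
    """Route each row to a partition based on its JOIN key (first field)."""
    parts = [[] for _ in range(n_partitions)]
    for row in rows:
        parts[row[0] % n_partitions].append(row)
    return parts

def local_inner_join(left_part, right_part):
    """INNER JOIN performed by one replica on the rows it received."""
    lookup = {}
    for key, value in right_part:
        lookup.setdefault(key, []).append(value)
    return [(k, p, v) for k, p in left_part for v in lookup.get(k, [])]

def partitioned_join(left_rows, right_rows, n_replicas):
    left_parts = partition_by_key(left_rows, n_replicas)
    right_parts = partition_by_key(right_rows, n_replicas)
    joined = []
    for left_part, right_part in zip(left_parts, right_parts):
        # Matching keys always land in the same partition, so no replica
        # needs the full right-hand side.
        joined.extend(local_inner_join(left_part, right_part))
    return joined, [len(part) for part in right_parts]

left = [(i % 8, f"event-{i}") for i in range(32)]
right = [(k, f"dim-{k}") for k in range(8)]

joined, right_rows_per_replica = partitioned_join(left, right, 4)
broadcast_rows_per_replica = [len(right)] * 4  # what broadcasting would send
print(right_rows_per_replica, broadcast_rows_per_replica)
```

The trade-off is visible in the two lists: partitioning sends each replica a fraction of the right-hand side, but it requires both sides to be split on the same key, which is exactly the kind of constraint broadcasting avoids.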

There are many ways we can improve upon this implementation. But it works, and that gives us a great starting point to test, benchmark, and iterate.

But it works. And it gives us a great place to start. Now, we can use parallel replicas in more use cases, see what works and what doesn’t, what could be improved, and iterate. Having a suboptimal approach that works is much better than an ideal approach that doesn’t.

The most striking part about the pull request is that, if you don’t count tests, it's only around 30 lines of code. It took Raul around one week to create the pull request after the initial proposal upstream (and we had already been thinking about the problem before this). If you do the math, that’s less than 1 LOC/hour. But hey, productivity isn’t measured by the weight of the airplane.

What’s next?

We’re excited about the possibility of testing parallel replicas in ClickHouse for very large queries, including those with JOINs. We’ve already done some initial tests with a use case representative of some of our largest customers, and the results are promising.

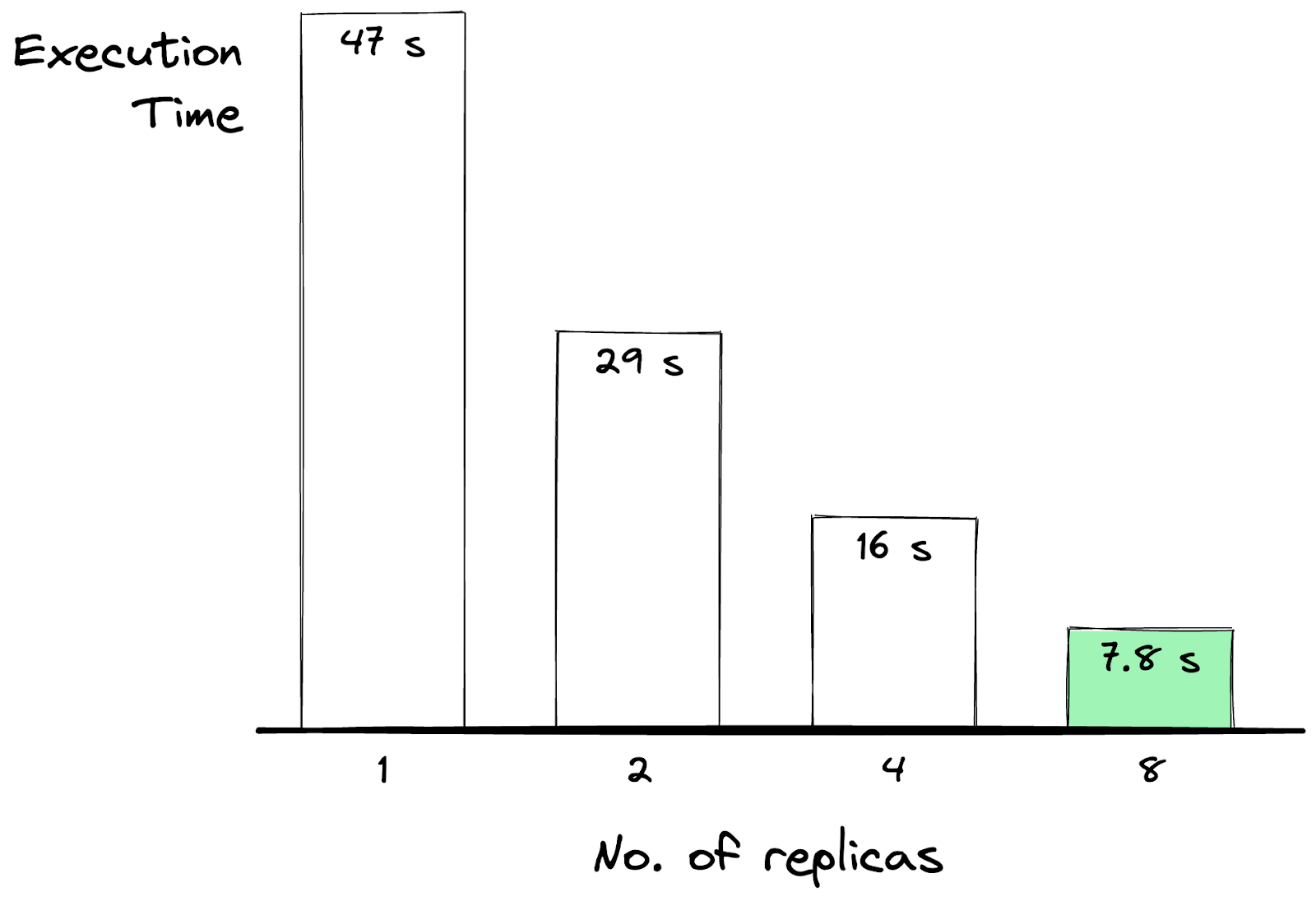

We reduced the time to run a very complex JOIN over 64B rows from 47 seconds to less than 8 using parallel replicas with JOIN support.

We have a complex query from one of our customers that does a JOIN between two very large tables, including window functions and grouping. The query processes 64 billion rows, almost 2.5 terabytes of data, stored in S3 with ZSTD(2) compression and with no caches. It takes 47 seconds to run this query without using parallel replicas. But with parallelization, we cut the execution time to less than 20% of that number (and that’s just the beginning):

- 1 replica (no parallel execution): 47s

- 2 replicas: 29s

- 4 replicas: 16s

- 8 replicas: 7.8s
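As a quick worked calculation, the timings above can be turned into speedup and parallel efficiency figures. The snippet below only uses the four measurements listed; the `scaling_report` helper is a hypothetical name for this illustration.

```python
# Measured wall-clock times from the list above: replicas -> seconds.
timings = {1: 47.0, 2: 29.0, 4: 16.0, 8: 7.8}

def scaling_report(timings):
    """Return (replicas, speedup, efficiency) relative to the 1-replica run."""
    baseline = timings[1]
    rows = []
    for replicas, seconds in sorted(timings.items()):
        speedup = baseline / seconds      # how many times faster than 1 replica
        efficiency = speedup / replicas   # 1.0 would be perfect linear scaling
        rows.append((replicas, speedup, efficiency))
    return rows

report = scaling_report(timings)
for replicas, speedup, efficiency in report:
    print(f"{replicas} replicas: {speedup:.2f}x speedup, {efficiency:.0%} efficiency")
```

With 8 replicas the query runs roughly 6 times faster than on a single replica, which works out to about 75% parallel efficiency. Scaling is sublinear, as you would expect with coordination and broadcast overhead, but it does not collapse as replicas are added.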

Of course, nobody is getting any awards for running a query in 8 seconds on ClickHouse. For the types of real-time applications we aim to solve, that’s not going to cut it.

But these results show tremendous promise, and there’s technically nothing stopping us from scaling a ClickHouse cluster to hundreds of replicas if that’s what a customer use case demands.

There's nothing technically stopping us from scaling a ClickHouse cluster to run this query in less than a second, which is game changing.

Of course, parallel replicas aren’t a magic bullet for everything. In fact, for simple queries, the overhead of using them outweighs the performance gains of distributing the query.

But we believe that they are going to be vital to performance at the next stage of scale for both Tinybird and anybody using ClickHouse as a distributed database. Right now, we're already enabling data engineers and developers to build sub-second APIs that process billions of rows of data. But with these changes, we're starting to explore a future with APIs that process trillions of rows at sub-second latency.

We’re excited to continue working on ClickHouse’s parallel replicas implementation to make JOIN support even more performant as we continue to harden our infrastructure for the next stages of scale we aim to achieve.

New to Tinybird? It's got all the speed of ClickHouse, but with an unmatched developer experience. If you want to try it out, you can sign up for free here. You don't have to put in a credit card, and there's no time limit for the free plan. If you get stuck or have questions, please join our Slack community. And if you'd like to solve really hard problems like this, we'd love to talk to you. You can check out our open positions here.