Hype v. Reality: 5 AI features that actually work in production

It's hard to sift through all the AI hype. Here are 5 AI features you can build that add immediate value to your app.

Every week, I hear developers (or marketing teams disguised as developers) talking about AI agents, MCP, RAG, "next-gen" models, and a dozen other frameworks and paradigms. The AI ecosystem is turning into an overwhelmingly fragmented space where it’s not only hard to keep up, but also hard to know the best approach for your real use case.

Meanwhile, there’s another, much quieter group of people: AI developers who are focused on building production-ready AI features.

In this blog post, you won't find lofty explanations of AI concepts, but rather practical and straightforward recipes that you can use to build AI features that actually work in prod.

I've implemented all 5 of the features below myself.

1. Vector search

Vector search is a technique used to search for similar items (text, images, audio, etc.) in a database.

I found this explanation very intuitive for understanding the concept.

To implement vector search, you need:

- An API to calculate embeddings for your data, ideally on ingestion or before. An embedding is a numerical representation of your data. Usually, it's a high-dimensional fixed-length vector that captures relationships and meaning.

- A database to store your data and its embeddings, optionally indexed, with a vector search engine.

- A vector search API to transform your users' queries into an embedding and search the database.

Practical example: search for a product on an e-commerce site

- Pick all the product attributes you might want to search by and concatenate them into a single string.

- Calculate an embedding for that string.

- Store the embedding in a vector database.

- When a user searches for a product, transform the user query into an embedding.

- Search the vector database with the embedding and order by similarity (vector search matches by semantic meaning, not exact matching).

- Show a filtered table to the user.

A simple way to calculate 384-dimensional vector embeddings using the Xenova all-MiniLM-L6-v2 model:

Of course, this is a simplified example. Embedding calculation requires taking into account things such as inference speed, domain and language support requirements, choosing a hosted or cloud text-to-embedding model, tracking usage, costs, performance, etc. That's why we still need software engineers, right?

Most OLTP/OLAP database systems have some kind of vector search support, and that’s usually a good starting point. Eventually, you can move to a specialized service if needed.

Embeddings storage

Depending on whether you are calculating embeddings on or after ingestion, you can model your schema in one of the following ways:

- Calculate embeddings on ingestion and store them in an Array(Float32) column in your landing table.

- Calculate embeddings after ingestion and store them in a dedicated table you then use only for vector search.

An example Tinybird data source to store embeddings:

Distance functions

For the actual vector search, you use distance functions. Each has different properties: some are deterministic and others are not, some require normalized vectors and others don't. The most widely used are cosineDistance and L2Distance.
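To make the two distance functions concrete, here is a minimal pure-Python sketch of what they compute and how ordering by distance gives you the nearest matches. The function names, the `search` helper, and the 3-dimensional toy vectors are assumptions made for illustration; a real database would run the equivalent of this over stored embeddings.

```python
import math

def cosine_distance(a, b):
    """1 - cosine similarity: depends on direction only, not magnitude."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)

def l2_distance(a, b):
    """Euclidean distance: sensitive to vector magnitude."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def search(query_embedding, rows, distance=cosine_distance, limit=3):
    """Return (distance, id) pairs for the closest rows, nearest first."""
    scored = [(distance(query_embedding, emb), row_id) for row_id, emb in rows]
    return sorted(scored)[:limit]

# Toy embeddings, made up for illustration (real ones have hundreds of dimensions)
products = [
    ("red running shoes", [0.9, 0.1, 0.0]),
    ("blue running shoes", [0.8, 0.2, 0.1]),
    ("coffee maker", [0.0, 0.1, 0.9]),
]
query = [1.0, 0.0, 0.0]
results = search(query, products, limit=2)
```

Note that cosine distance treats `[1, 0]` and `[2, 0]` as identical while L2 distance does not, which is why normalization matters for some functions and not for others.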

A simple vector search query in Tinybird could look like this:

A fully working example

Here is a basic vector search API implementation. It receives a string as an input and returns an embedding (Array of floats) as an output. The embedding is then sent to this Tinybird API, which receives the embedding as a parameter and uses the cosineDistance function, ordering by similarity to return matches.

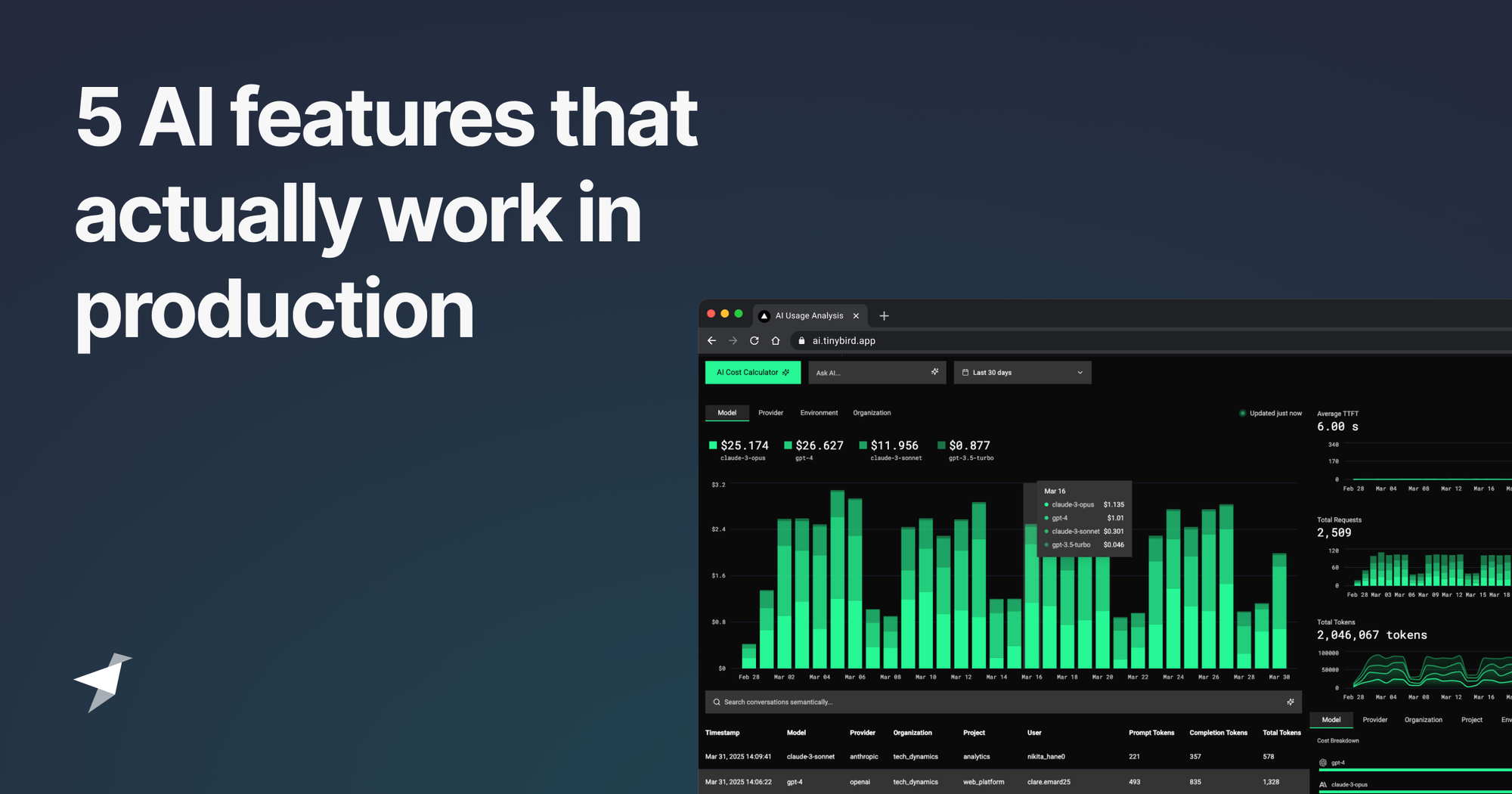

An example implementation of vector search in my demo AI Usage Analysis app.

2. Filter with AI

I learned this from Dub, which has an "Ask AI" feature:

Imagine you have a real-time dashboard where your users can filter and drill down over a set of dimensions (continent, country, browser, UTM params, etc.).

Historically, product teams have solved this by adding a "Filter by" section to the dashboard that allows users to choose a dimension and a value or set of values. A good example is the sidebar component from our own Logs Explorer.

But when you have dozens of dimensions, this can become impractical.

An "Ask AI" search input allows your users to filter the dashboard with free text. LLMs are good at this.

- Create an API that receives a dimension value, e.g. newyork. This is the user input, so typos are fine.

- Build context for the LLM. Get the list of dimensions and their unique values from your analytical APIs.

- Use an LLM query to transform the free-text into a structured output, e.g. JSON with a dict of dimension names and value for each dimension: {"city": "New York"}

- Send the JSON to your analytical API: https://api.tinybird.co/v0/pipes/sales_by_day.json?city=New%20York

- Show the filtered dashboard to the user.

- Optionally, use a cache to save repeated LLM calls.

This process works well with real-time dashboards, where you can provide context to the LLM query and then filter the whole dashboard in milliseconds.
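The steps above can be sketched roughly as follows. This is only an illustration under assumptions: `DIMENSIONS`, `call_llm`, and every helper name are hypothetical, the LLM is replaced by a stub so the sketch runs on its own, and the in-memory dict stands in for whatever cache you actually use. The part worth noticing is that the LLM reply is validated against the known dimensions before it reaches the analytical API.

```python
import json
from urllib.parse import quote, urlencode

# Hypothetical context; in practice this comes from your analytical APIs.
DIMENSIONS = {
    "city": ["New York", "London", "Madrid"],
    "browser": ["Chrome", "Firefox", "Safari"],
}

def build_system_prompt(dimensions):
    """List the allowed dimensions and values so the LLM can fix typos."""
    lines = ["Map the user's text to filters. Reply with a JSON object only.",
             "Allowed dimensions and values:"]
    for name, values in dimensions.items():
        lines.append(f"- {name}: {', '.join(values)}")
    return "\n".join(lines)

def parse_filters(llm_reply, dimensions):
    """Keep only dimension/value pairs that exist in the context."""
    try:
        data = json.loads(llm_reply)
    except json.JSONDecodeError:
        return {}
    if not isinstance(data, dict):
        return {}
    return {k: v for k, v in data.items()
            if k in dimensions and v in dimensions[k]}

def build_url(base_url, filters):
    """Turn the validated filters into query-string parameters."""
    if not filters:
        return base_url
    return base_url + "?" + urlencode(filters, quote_via=quote)

_cache = {}  # optional: avoids repeated LLM calls for the same input

def ask_ai(user_text, call_llm, dimensions=DIMENSIONS):
    key = user_text.strip().lower()
    if key not in _cache:
        reply = call_llm(build_system_prompt(dimensions), user_text)
        _cache[key] = parse_filters(reply, dimensions)
    return _cache[key]

# Stub standing in for a real LLM call (assumption for this sketch)
llm_calls = []
def fake_llm(system_prompt, user_text):
    llm_calls.append(user_text)
    return '{"city": "New York", "planet": "Mars"}'

filters = ask_ai("newyork", fake_llm)
ask_ai("NewYork ", fake_llm)  # served from the cache, no second LLM call
url = build_url("https://api.tinybird.co/v0/pipes/sales_by_day.json", filters)
```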

A fully working example

This is a basic version of the Search API using the Vercel AI SDK generateObject call and OpenAI as the LLM provider.

The system prompt contains a list of dimensions and unique values and the expected output schema:

The Tinybird API is parameterized to support filters by model, provider, environment, and more.

This is how the flow is implemented in the application:

Ask AI features convert free text input into structured parameters for visualization

3. Visualize with AI

Once you start using LLM queries for structured outputs, you see a lot of opportunities to use them for other features.

Instead of providing a predefined dashboard, for example, you can let your users ask AI to visualize the data the way they want it.

I ran an experiment after seeing this tweet from Toby Pohlen, one of the founding members of xAI.

We have a similar feature on our API console. The CMD+K menu is actually connected to Grok and it allows you to get stuff done more quickly.

(video is sped up) https://t.co/YcdhSansAi pic.twitter.com/YmS6N36Gh7

— Toby Pohlen (@TobyPhln) February 26, 2025

The idea is simple: once you have built your analytical APIs, you can visualize them in a custom UX rather than the standard dashboard.

For this type of use case, it's very common to use a chat interface or a natural language query interface.

Most of your work consists of building a good system prompt tailored to your use case, gathering context, and fine-tuning.

- Create an API that receives a natural language query, e.g. sales last week in new york stores, top 5 products. This is a user's input, so grammatically incorrect sentences are fine.

- Build context for the LLM.

- Use an LLM query to transform the natural language into a structured output, e.g. a JSON with a dict of dimension names and a list of values for each dimension.

- Send the LLM output to a generic visualization web component connected to your analytical API.

- Optionally, add a feature to save free-text queries for later use.

As opposed to the "Filter with AI" feature, this kind of feature (besides being useful for exploratory analysis) is also good for product engineers to discover user patterns and intents and build new features out of them.

My recommendation in this case is to make sure you instrument your LLM queries to detect usage patterns and errors and optimize the user experience.
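As a rough idea of what that instrumentation could look like, here is a small sketch that wraps any LLM call and records one event per request. Everything here is an assumption for illustration: the `instrumented` wrapper, the event fields, and the stub LLM are hypothetical, and in a real app the events would go to your analytics backend rather than a Python list.

```python
import time

def instrumented(call_llm, log):
    """Wrap an LLM call so every request appends one event to `log`."""
    def wrapper(prompt):
        start = time.perf_counter()
        event = {"prompt": prompt, "ok": True, "error": None}
        try:
            return call_llm(prompt)
        except Exception as exc:
            # Record the failure, then let the caller handle it as usual
            event["ok"] = False
            event["error"] = repr(exc)
            raise
        finally:
            event["latency_ms"] = (time.perf_counter() - start) * 1000
            log.append(event)
    return wrapper

# Stub standing in for a real LLM call (assumption for this sketch)
def stub_llm(prompt):
    if not prompt:
        raise ValueError("empty prompt")
    return '{"provider": "anthropic"}'

events = []
ask = instrumented(stub_llm, events)
answer = ask("cost by provider last week")
try:
    ask("")
except ValueError:
    pass  # the failed call is still recorded in `events`
```

Logging the raw prompt alongside success and latency is what lets you later group queries by intent and spot the ones that fail.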

A fully working example

I built a project to analyze LLM usage and costs and a predefined dashboard using Tinybird. Now I want the users to explore the data using a free text search input, so they can run queries such as:

I built a Tinybird pipe that can answer that type of question. I have the API, it's just a matter of translating the free text search query into structured output parameters for the pipe.

The important part is building a comprehensive system prompt (and, of course, instrumenting it so I can fine-tune it later).

The LLM needs to know the schema of the structured output, so we can provide it statically like this.

The LLM needs to know Anthropic corresponds to a provider parameter in the Tinybird API. In this case, we gather all this context by running a query on the data in Tinybird. For that, we use the Tinybird SQL API and a query to get unique values for the dimensions.

Finally, other kinds of translation are needed. For instance, last week needs to be mapped to a date range using the start_date and end_date parameters.
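To show what that mapping amounts to, here is a small deterministic sketch. It is not how the demo app does it (there the definitions live in the system prompt); `resolve_date_range` and the supported expressions are hypothetical, and treating "last week" as the previous full Monday-to-Sunday week is an assumption you may want to define differently.

```python
from datetime import date, timedelta

def resolve_date_range(expression, today):
    """Map a relative expression to start_date/end_date ISO strings."""
    expr = expression.strip().lower()
    if expr == "today":
        start = end = today
    elif expr == "yesterday":
        start = end = today - timedelta(days=1)
    elif expr == "last week":
        # Assumption: the previous full Monday-to-Sunday week
        this_monday = today - timedelta(days=today.weekday())
        start = this_monday - timedelta(days=7)
        end = this_monday - timedelta(days=1)
    elif expr == "last 30 days":
        start, end = today - timedelta(days=29), today
    else:
        raise ValueError(f"unsupported expression: {expression!r}")
    return {"start_date": start.isoformat(), "end_date": end.isoformat()}

# Passing `today` explicitly keeps the function easy to test
params = resolve_date_range("last week", today=date(2025, 3, 20))
```

A helper like this can also be used to check the dates an LLM returns, since relative dates are an easy place for it to be off by a day.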

This is a basic version of the Search API using the Vercel AI SDK generateObject call and OpenAI as the LLM provider.

This API can be generalized by gathering context and data from Tinybird dynamically. For instance, instead of statically defining the response schema, we could just get the pipe definition dynamically:

This way, a basic system prompt can be enriched with dimensions and unique values, the output schema definition, the pipe endpoints catalog, and some basic definitions to translate dates and common expressions.

Bye, BI 👋

Vibe coded a tiny NextJS component that translates text into real-time charts

Built with @aisdk, @tremorlabs and @tinybirdco

I use it to calculate our LLM usage costs, but it's easy to generalize to any data source pic.twitter.com/W0WYa8ogL8

— alrocar 🥘 (@alrocar) March 20, 2025

4. Auto-fix with AI

This is a feature that you can find in many developer tools (for instance, Tinybird or Cursor). It's a simple QoL feature and the most basic “AI agent”.

When you are building with a devtool you make mistakes all the time. Those mistakes can be syntax errors, missing imports, etc. You get a red error message, read it, and fix it.

Traditionally, you developed your debugging skills, learned from those errors, and fixed them on your own. Maybe you asked for help on a forum or a chat, read the docs, or asked a colleague.

Today, you just throw the error to an LLM, and it fixes it for you.

“But LLMs are not deterministic, and they can give you the wrong answer!” Yes, and that leads to the first principle of AI agents: make the LLM evaluate whether the answer is correct.

Consider the Tinybird Playground. It's a feature we have where you can build and test your SQL queries. It's very typical to introduce syntax errors when you are building your query, and sometimes they are not obvious to fix. An auto-fix feature helps you fix them without slowing you down.

How to build an auto-fix feature:

- Create an API that receives an error message and the SQL.

- Build context for the LLM: the data source schemas, a sample of the data, an llms.txt file with the docs, etc.

- Use an LLM query to fix the error.

- Re-run the original API request with the fixed SQL.

- If it fails, repeat the process, adding the new error to the context.

- When it succeeds, show the result to the user.
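The loop described in these steps can be sketched like this. It is a minimal illustration, not the Tinybird implementation: `auto_fix`, `run_query`, and `ask_llm_to_fix` are hypothetical names, the two stubs replace a real query API and a real LLM, and the `max_attempts` cap is an assumption added so a model that keeps failing cannot loop forever.

```python
def auto_fix(sql, run_query, ask_llm_to_fix, max_attempts=3):
    """Run `sql`; on failure, ask the LLM for a fix and retry.

    run_query(sql) returns rows or raises with the error message.
    ask_llm_to_fix(sql, errors) returns a corrected SQL string.
    """
    errors = []
    for attempt in range(max_attempts + 1):
        try:
            return sql, run_query(sql)
        except Exception as exc:
            # Keep every error so the LLM sees what was already tried
            errors.append(str(exc))
        if attempt < max_attempts:
            sql = ask_llm_to_fix(sql, errors)
    raise RuntimeError(
        f"could not fix query after {max_attempts} attempts: {errors[-1]}"
    )

# Stubs standing in for the query API and the LLM (assumptions for this sketch)
def fake_run_query(sql):
    if "FORM" in sql:
        raise ValueError("Syntax error near FORM")
    return [{"total": 42}]

def fake_llm_fix(sql, errors):
    return sql.replace("FORM", "FROM")

fixed_sql, rows = auto_fix(
    "SELECT count() AS total FORM events", fake_run_query, fake_llm_fix
)
```

Re-running the query is what makes the fix verifiable: the answer is only shown to the user once the API itself accepts it.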

With the rise of MCP, you can give the LLM access to a set of tools, in this case your own API, so it autonomously decides when the answer is correct, gathers real-time context, etc.

5. Explain with AI

State-of-the-art LLMs are sometimes terrible at trivial tasks but great at explaining complex concepts, or describing your own data, or gathering multiple sources of information.

An example: In most technical companies, you have:

- Public product documentation

- API, CLI, SDKs references

- Internal knowledge base

- Internal documentation and wikis

- Issue trackers, PRs, and PRDs

- Slack channels, internal discussions, etc.

And then there's a single source of truth for technical questions: the code.

When integrated to be consumed by an LLM, all this info can be used to answer most of the support requests from your users.

At Tinybird, we've been testing internally an in-house AI agent that aggregates all the information above and answers support questions about the codebase. In this case, the feature is delivered as a Slack chatbot, and it significantly reduces the internal support required from engineers to user-facing teams like customer success and "devrel".

A couple of related QoL tips

A small related QoL tip consists of making your public docs easily digestible by LLMs. Everyone is using llms.txt, which is a good way for your documentation to be indexed by LLMs or integrated into development tools such as Cursor (or your own application).

And another one: send your docs directly to ChatGPT so your users can ask any question. For example, I found myself today wanting to send a Google Doc + comments to an LLM to integrate some peers’ comments into this blog post xD

✨ You can now copy every Tinybird doc as Markdown, see the source, or send it to ChatGPT.

This is the same behavior as the one recently introduced by Stripe Docs.

🧑🍳😘 pic.twitter.com/Ns3nTuu8X1

— Tinybird (@tinybirdco) March 18, 2025

Closing thoughts

If anybody is shilling AI best practices, take it with a grain of salt. We're building the car as we drive it, and everybody is learning.

Instead of getting lost in the hype, start building features. These are 5 features you can build pretty quickly, and they actually give your users a lot of value.

If you want to see an example implementation for each one, you can view the demo of my AI Usage Analysis app, which gives detailed metrics on how my app uses LLM calls. The code for it is here. All these features were built using Tinybird as the analytics backend.