Changelog #17: Guided tour, Kafka ingestion improvements and more

Lots has been happening at Tinybird. The team is growing fast and everybody is working to improve the developer experience. Here are some recent features and updates that you may have missed.

Lots has been happening at Tinybird. The team is growing fast and everybody is working to improve the developer experience. Here are some recent features and updates that you may have missed.

There is a strong focus on improvements for working with Kafka streams and NDJSON, the format where each individual row is any valid JSON text and each row is delimited with a newline character. Watch out for product announcements coming soon.

Guidance and flexible working

When you first sign up to Tinybird you can choose your region from the current options of US East or Europe.

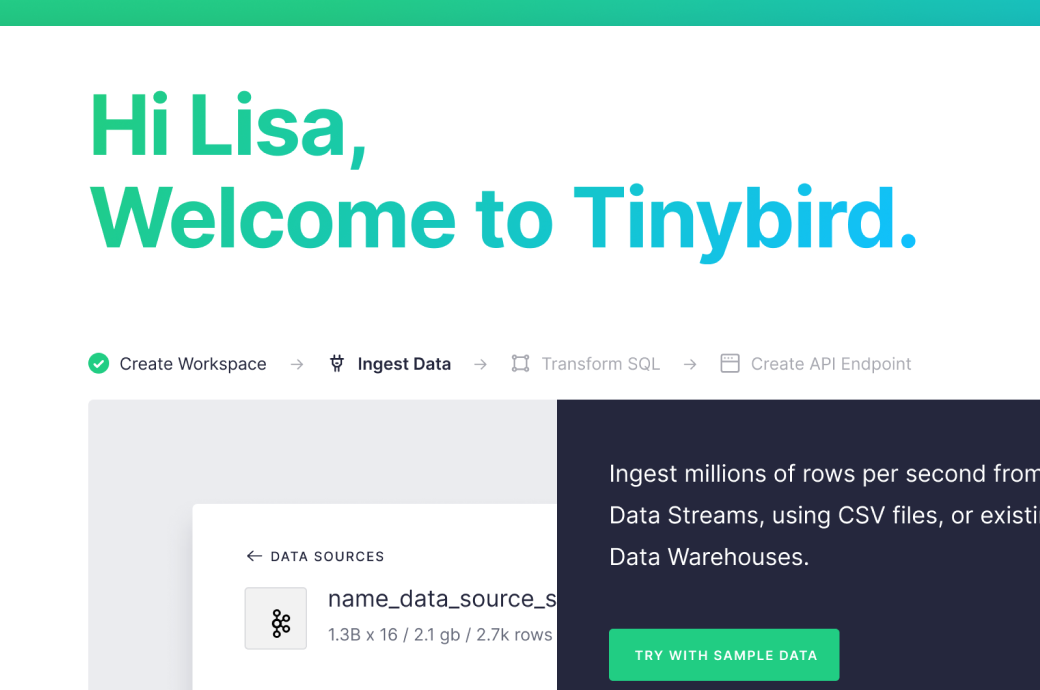

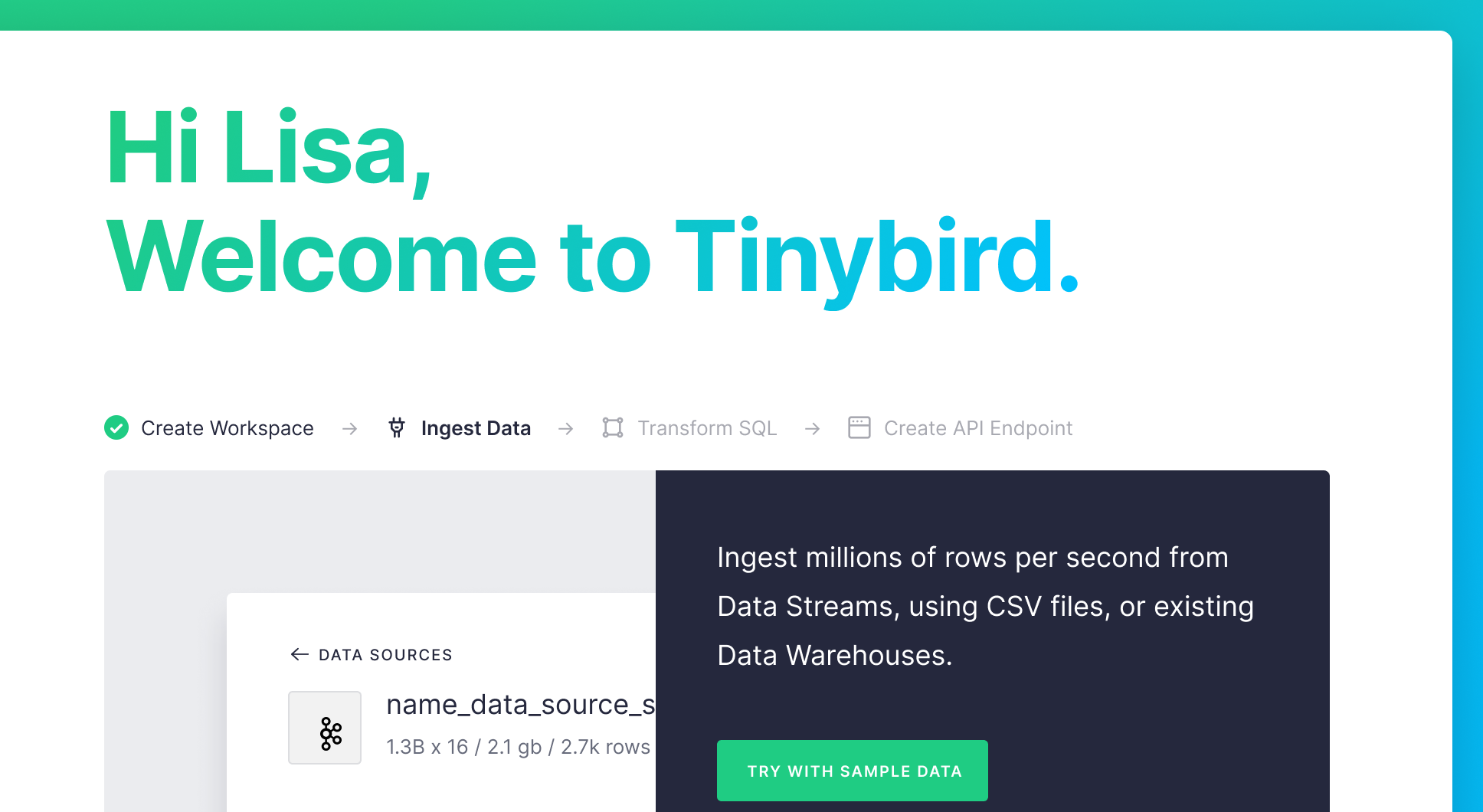

A guided tour now walks you through the four steps to success:

- create a Workspace

- ingest data

- transform SQL

- create API endpoint.

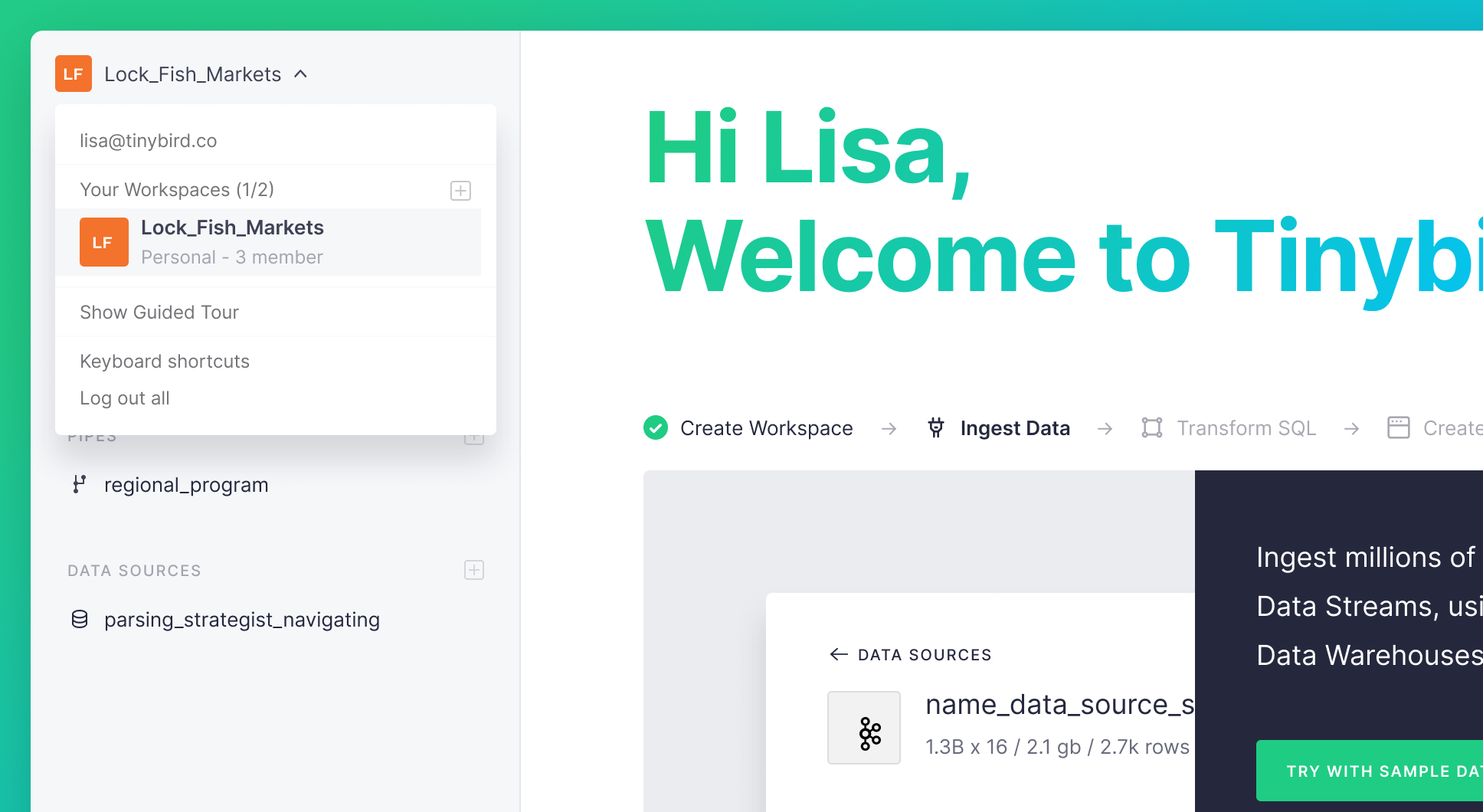

The guide will be displayed automatically when you first create a Workspace. Anytime you want to see it again, just click on “Show guided tour” on the user dropdown.

Directly in a new Workspace you are now guided through the creation of a pipe and the ingestion of data from a local file, a remote URL or Kafka. You no longer need to read the guides and docs to get started.

Teamwork matters, so share your Workspaces with your colleagues to work together on the same project and share Data Sources across Workspaces so that you just ingest and transform data once and then make it available to other teams. In practice, this means your Data Engineers can manage the data and keep it safe while other teams use the data you give them access to for their projects.

Improving ingestion from Kafka

Ingestion from Kafka is out of beta and available for all.

Custom code on the Kafka agents is now being used to deserialize JSON. This optimisation makes ingestion from Kafka faster and easier to scale.

There is documentation for the kafka_ops_log service Data Source, which contains information on all the operations on your Kafka Data Sources.

Responding to user feedback to improve the experience

We’ve speeded up the loading of your Workspace, even if it contains hundreds of Data Sources and Pipes, added lots of enhancements to the Data flow and improved usability of modals after secondary actions.

New API features

The Data Sources API now supports NDJSON. Have a look at the guide and docs on ingesting NDJSON.

You can use the new v0/analyze API to guess the file format, schema, columns, types, nullables and JSONPaths (in the case of NDJSON paths). This will help you when creating Data Sources.

CLI updates

Check out the latest command-line updates. Highlights include:

- using environment variables in a template by having a statement like this

INCLUDE "includes/${env}_secrets.incl"and callingtb env=test tb push file.datasource - having a workspace as a dependency

tb push –workspace push,pullandappendnow work with NDJSON- supporting

–tokenand–hostparameters for auth and workspace commands. This is useful when you want to automate commands via shell scripts or similar and you want to pass those parameters via environment variables without having to dotb authwith the token prompt. For example,tb –token <token> workspace lswill list the workspaces for the token passed as a parameter.

ClickHouse

Not only are we improving Tinybird but we are also contributing to ClickHouse in the process, specially around performance. Some examples:

- a ~1.4x performance improvement in Clickhouse query parsing speed

- performance improvements to avg and sumCount aggregation functions

- performance boost for count over nullable columns

- improve the max_execution_time checks so that timeouts are better respected

Community Slack

Tinybird now has a community Slack! This is the place to go if you have any questions, doubts or just want to tell everyone what you are doing with Tinybird. Come by and say hello!