Create a Static Application to Analyze +50M Github Events

Creating applications just using HTML, javascript and CSS is easier than ever with new frameworks and platforms like Zeit. Let see how to create a simple analytics application that queries 50M rows in real time without backend.

Static applications have a lot of advantages: they run fully on the browser, you don't need a full stack developer to create an app, they are fast, scale pretty well and are easy to deploy with the help of tools like Zeit and it's easy to build more complex apps with frameworks like next.js or Gatsby.

The main problem with this kind of apps is you often need to get data from different services. Nowadays there are services for almost everything and you can talk to them directly from your static app or through a small backend or maybe with a bunch of lambda functions (also provided by Zeit). Ideally the less pieces between your app and the services providing the data the better, less latency, less points of failure, less things to maintain.

Tinybird is one of those services: it provides a way to query large amounts of data in real time. In this case we are building a static application that shows some stats over more than 50M github events from the last January.

Let's start, we assume you have Zeit and Tinybird accounts.

Loading raw events from github data

Github provides access to public raw events produced by their platform events data in JSON format, one file per hour. We will load data for January 2020 as a example.

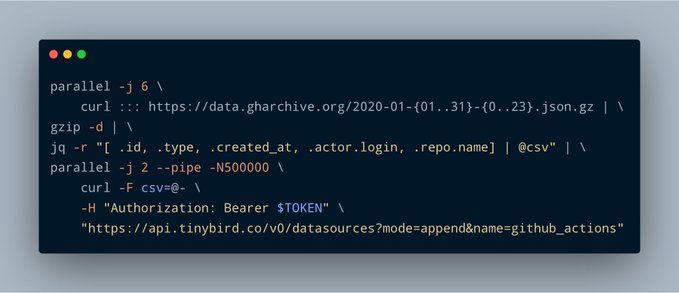

Before loading it we need to select the data from the JSON events we want to analyze and tranform to CSV. In this case we just need to pipe data though jq to transform to CSV and push to Tinybird using the API. We could load all the events but let's keep only some of them for the sake of simplicity.

Let me explain the command.

First, use parallel to load using 6 curl workers. Notice the bash expansion trick to load all the hours for the whole month:

After that, we decompress with gzip -d. Then, using jq, we select the fields we want to analyze from the JSON events and transform to CSV. We batch the data using GNU's parallel using two workers, -N500000 means we are sending 0.5 million rows batches. Finally we pipe those batches to curl using multipart upload to push to Tinybird. Notice we use the append mode and the Data Source with name github_actions. You don't need to set an schema, Tinybird guesses everything for you.

That ${TB_IMPORT_TOKEN} variable is a token with import permissions, you can grab it from the dashboard of your Tinybird account.

Creating the static aplication

To show how to query that data, we created a pretty simple Vue application. It could be done using any other framework, React, Angular, or just vanilla javascript. The application will show top users and repositories for each different event. Obviously, a real application would have a build pipeline, testing and so on, for this example a simple HTML file is fine.

Fetching the whole dataset (1.5Gb compressed) would be impossible, it would crash the browser. So, in this case, we need to aggregate the data. Tinybird provides APIs to fetch the data in different forms, in the case the app fetches aggregated data in JSON format using the query API, using plain SQL.

If you don't like SQL, it's also possible to create an endpoint (/v0/pipes/github_actions_tops.json) using Tinybird's UI.

For this example, let's just use the SQL interface.

The application is really simple:

- when the application starts, the different events are requested using

tinyb.querymethod from tinybird.js

- when the user selects one type of event, the app fetches top users, top repos and event count:

This is the code for the full app (see the complete source code on github)

The deploy

This is as easy as executing now command thanks to Zeit:

You can run the application here.

Does my data need to be public?

In this particular case github data is public so to keep the data private makes no sense but it's possible to use access tokens. In the source code when tinybird object is created a token is passed as an argument. That token is hardcoded in the HTML in this case but it could be provided by a backend behind a login.

You could generate tokens for each user and even add restrictions like filtering only one kind of event or date range.

Sending raw SQL from the client application is not insecure!

If you allow to send queries like DROP TABLE or DELETE FROM, it'd be a very big issue, but that's not the case. We only run the queries that are accepted by the token in use, so you can't remove data or access data you don't have access to.

You could also limit the SQL usage and generate regular endpoints that accepts input as parameters, like:

How fast are the queries?

The average query time on the small account we use in this example is about 300ms. Time is reported on headers and also in the JSON payload. There are no intermediate caches involved. No other optimizations like indices are created.

We are opening new accounts, request early access.