We made an open source LLM Performance Tracker

You can't just build AI features; you have to operate them in production, and that means observability. Here's an open source tool to monitor your LLMs in real time.

Building AI features can feel like flying a fighter jet through fog: the capabilities are incredible, but the visibility is limited. LLMs don't behave like typical APIs. Their responses are stochastic and their costs vary from call to call, which makes observability essential.

Building AI features is only half the job. We also have to operate them in production: track performance, analyze metrics, and iterate.

AI engineers and AI-native founders need better tools for LLM observability, and companies such as Dawn are being built to solve this problem. In the meantime, we've created an open source utility to help anyone building AI features keep tabs on their models.

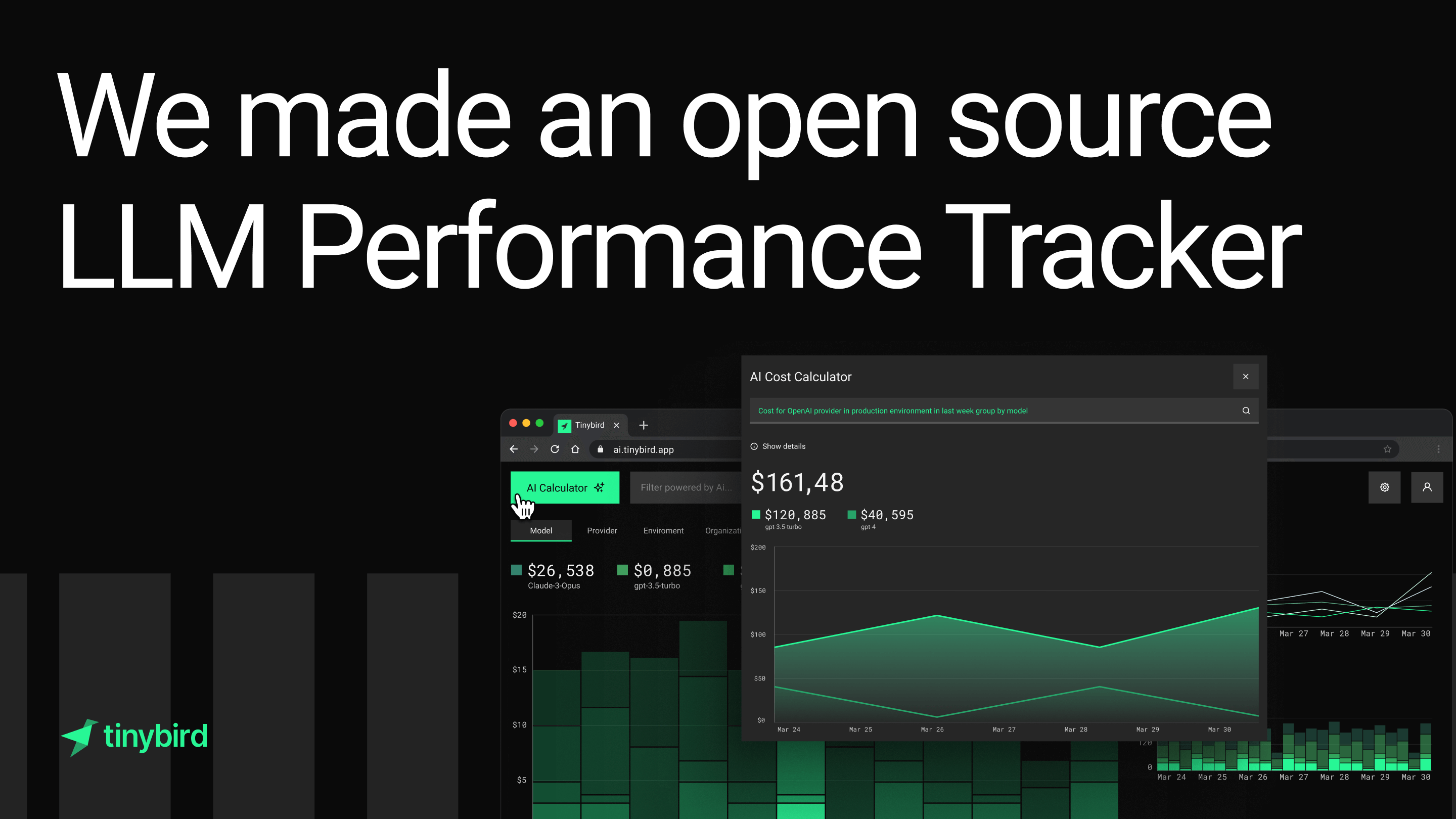

Introducing the LLM Performance Tracker

The LLM Performance Tracker helps AI engineers and software developers track LLM performance across their app stack. It's a Next.js app with a Tinybird backend that can capture millions of LLM call traces and visualize key performance metrics such as cost, time-to-first-token, and total requests and tokens in real time, with multi-dimensional filtering and drilldown.

We built the LLM Performance Tracker to be immediately deployable so you can instrument your AI app and answer questions like:

- Are there usage spikes or users consuming tokens disproportionately?

- Which models give me the best bang for my buck?

- Are certain providers or models consistently less successful?

- Which prompts or user inputs lead to failed completions?

- How does adjusting the "temperature" parameter affect response quality and costs?

- You name it

The project is open source, and we encourage you to modify it to meet your specific needs. We've even included support for multi-tenancy using Clerk JWTs in case you want to borrow the components and integrate them into your app for user-facing features.

How we built a multi-tenant LLM performance tracker

In terms of tools, infrastructure, and workflow, building an analytics dashboard for AI features is pretty similar to building analytics for any product feature.

Our app uses the following tech stack:

- Next.js: App/Dashboard

- Tremor: Charts

- Vercel AI SDK + OpenAI: AI Features

- Vercel: Deployment

- Clerk: Authentication and user management

- Tinybird: Instrumentation + Analytics

Instrumentation

Start by instrumenting your LLM calls. At Tinybird, we send LiteLLM events (Python) and instrument Vercel AI SDK calls (TypeScript), but you can instrument whichever LLM provider you use and send thousands of LLM call events per second to Tinybird.
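As a rough sketch of what that instrumentation can send, the TypeScript below posts call events to Tinybird's Events API (POST /v0/events?name=<data source>), which accepts newline-delimited JSON. The event fields are illustrative assumptions rather than the template's exact llm_events schema, and the request is built separately from the network call so it can be inspected offline.

```typescript
// Illustrative event shape; the field names are assumptions, not the
// template's exact llm_events schema.
interface LlmCallEvent {
  timestamp: string; // ISO 8601
  organization: string;
  project: string;
  environment: string;
  user: string;
  chat_id: string;
  message_id: string;
  provider: string;
  model: string;
  prompt_tokens: number;
  completion_tokens: number;
  duration_ms: number;
  ttft_ms: number;
  cost: number;
}

interface EventsRequest {
  url: string;
  init: { method: string; headers: Record<string, string>; body: string };
}

// The Events API takes newline-delimited JSON: one object per line.
function toNdjson(events: LlmCallEvent[]): string {
  return events.map((event) => JSON.stringify(event)).join("\n");
}

// Build (but do not send) the HTTP request for a batch of events.
function buildEventsRequest(
  host: string,
  token: string,
  dataSource: string,
  events: LlmCallEvent[],
): EventsRequest {
  const url = new URL("/v0/events", host);
  url.searchParams.set("name", dataSource);
  return {
    url: url.toString(),
    init: {
      method: "POST",
      headers: { Authorization: `Bearer ${token}` },
      body: toNdjson(events),
    },
  };
}

// Send a batch; requires a runtime with a global fetch (e.g. Node 18+).
async function sendEvents(
  host: string,
  token: string,
  dataSource: string,
  events: LlmCallEvent[],
): Promise<void> {
  const { url, init } = buildEventsRequest(host, token, dataSource, events);
  const response = await fetch(url, init);
  if (!response.ok) {
    throw new Error(`Events API returned ${response.status}: ${await response.text()}`);
  }
}
```

In a real app you would call sendEvents after each completion (or batch several), passing your workspace's API host (it depends on your region) and a token with append rights on the data source.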

Data storage

The Tinybird project that calculates the metrics is simple: a single data source to store LLM call events, and just three APIs, built from Tinybird pipes, to calculate and expose the LLM metrics for the dashboards.

All events land in a single data source called llm_events (here's the table schema). It contains the metrics and metadata you would find in a typical LLM provider's API response.
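Many provider APIs report token usage rather than a dollar amount, so a cost column usually has to be derived. A minimal sketch, assuming per-token pricing with separate input and output rates; the model names and prices below are placeholders, not real figures.

```typescript
// Placeholder prices in USD per million tokens. These are NOT real
// provider prices; replace them with your providers' current rates.
interface ModelPrice {
  inputPerMillion: number;
  outputPerMillion: number;
}

const PRICES: Record<string, ModelPrice> = {
  "example-model-small": { inputPerMillion: 0.5, outputPerMillion: 1.5 },
  "example-model-large": { inputPerMillion: 2.5, outputPerMillion: 10 },
};

// Cost of one call. Returns undefined for models without a known price,
// so missing prices stay visible instead of being recorded as zero.
function callCost(model: string, promptTokens: number, completionTokens: number): number | undefined {
  const price = PRICES[model];
  if (price === undefined) return undefined;
  return (promptTokens * price.inputPerMillion + completionTokens * price.outputPerMillion) / 1_000_000;
}
```

Computing cost at instrumentation time means the value stored with each event reflects the rates in effect when the call was made.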

You can also store custom, domain-specific metadata, including the multi-tenancy dimensions: organization, project, environment, and user. The schema includes chat_id and message_id for tracing, and you can optionally extend your LLM provider instrumentation to support full traces.
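The chat_id and message_id columns are what make per-conversation views possible. As a hedged illustration, the function below groups call events by chat_id and summarizes each conversation. It is written in TypeScript for clarity, although in the template this kind of rollup would more naturally live in a Tinybird pipe, and the field names are assumptions.

```typescript
// Minimal event fields needed for a trace summary (names are assumptions).
interface TraceEvent {
  chat_id: string;
  message_id: string;
  timestamp: string; // ISO 8601
  total_tokens: number;
  cost: number;
}

interface ChatSummary {
  chat_id: string;
  messages: number;
  total_tokens: number;
  cost: number;
  first_message_id: string;
  last_message_id: string;
}

// Group events by chat_id and roll each conversation up into one summary.
function summarizeChats(events: TraceEvent[]): ChatSummary[] {
  const byChat: Record<string, TraceEvent[]> = {};
  for (const event of events) {
    if (!byChat[event.chat_id]) byChat[event.chat_id] = [];
    byChat[event.chat_id].push(event);
  }
  return Object.keys(byChat).map((chatId) => {
    // Order messages by time so first/last reflect the conversation flow.
    const group = byChat[chatId]
      .slice()
      .sort((a, b) => Date.parse(a.timestamp) - Date.parse(b.timestamp));
    return {
      chat_id: chatId,
      messages: group.length,
      total_tokens: group.reduce((sum, e) => sum + e.total_tokens, 0),
      cost: group.reduce((sum, e) => sum + e.cost, 0),
      first_message_id: group[0].message_id,
      last_message_id: group[group.length - 1].message_id,
    };
  });
}
```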

APIs

The APIs are basic SQL queries to the main llm_events table, with dynamic query parameters to support multiple visualizations:

- The main time series chart visualizes costs by various dimensions using the llm_usage API.

- Three spark charts give an at-a-glance view of the main metrics: time-to-first-token (TTFT), total tokens, and total requests. These all use the same llm_usage API.

- A data table and detail view show individual LLM calls, powered by the llm_messages API.

- A bar list shows total cost by model, provider, organization, project, user, and environment, and can be used to filter the dashboard. It uses the generic_counter API.
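Each visualization calls one of these endpoints with a different set of query parameters. A minimal sketch of such a call against Tinybird's /v0/pipes/<pipe>.json endpoint URL; the parameter names (start_date, end_date, column, model, provider) are hypothetical, so use the ones the pipes actually declare. Unset filters are omitted so that the pipe's own defaults, if any, apply.

```typescript
// Query parameters for the usage endpoint. These names are hypothetical;
// check the pipe's actual parameter definitions in the repo.
interface UsageParams {
  start_date?: string;
  end_date?: string;
  column?: string; // dimension to break costs down by, e.g. "model"
  model?: string;
  provider?: string;
}

// Build the URL for a Tinybird API endpoint in JSON format,
// skipping parameters that were not set.
function endpointUrl(host: string, pipe: string, params: UsageParams): string {
  const url = new URL(`/v0/pipes/${pipe}.json`, host);
  for (const [key, value] of Object.entries(params)) {
    if (value !== undefined && value !== "") url.searchParams.set(key, String(value));
  }
  return url.toString();
}

// Tinybird JSON responses carry the result rows in a `data` array.
async function fetchEndpoint<Row>(
  host: string,
  token: string,
  pipe: string,
  params: UsageParams,
): Promise<Row[]> {
  const response = await fetch(endpointUrl(host, pipe, params), {
    headers: { Authorization: `Bearer ${token}` },
  });
  if (!response.ok) throw new Error(`Endpoint ${pipe} returned ${response.status}`);
  const body = (await response.json()) as { data: Row[] };
  return body.data;
}
```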

By the way, we built the basic version of the entire project from a one-shot prompt.

AI features

The app showcases some nice AI features using the Vercel AI SDK and Tinybird's vector search functionality. These are easy-to-build features that add value to real-time applications such as this. You can learn how we built these AI features in this blog post.

Multi-tenancy

Finally, the app uses Clerk to manage user access, authentication, and multi-tenant dashboards. Clerk issues a JWT based on its Tinybird JWT template. The JWT includes claims that filter API requests by organization (or any other domain or tenant dimension), define rate limits, and even control fine-grained permissions to specific resources. Read this guide to learn more about how to quickly build multi-tenant analytics APIs with Clerk and Tinybird.
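In the template, Clerk mints these tokens from its Tinybird JWT template. To make the claims concrete, here is a hedged sketch that builds and signs an equivalent token by hand with Node's crypto module, modeled on the structure described in Tinybird's JWT docs (workspace_id, exp, scopes with fixed_params, limits.rps). The workspace id, the organization parameter name, and the rate limit are placeholders, and the signing secret (per Tinybird's docs, your workspace admin token) must never leave the server.

```typescript
import { createHmac } from "node:crypto";

// Claim structure modeled on Tinybird's JWT format; ids, pipe names,
// and the "organization" parameter are placeholders.
interface TinybirdScope {
  type: "PIPES:READ";
  resource: string;
  fixed_params?: Record<string, string>;
}

interface TinybirdClaims {
  workspace_id: string;
  name: string;
  exp: number; // Unix seconds
  scopes: TinybirdScope[];
  limits?: { rps: number };
}

function base64url(input: string): string {
  return Buffer.from(input, "utf8").toString("base64url");
}

// Sign claims as an HS256 JWT: base64url(header).base64url(payload).signature
function signJwt(claims: TinybirdClaims, secret: string): string {
  const header = base64url(JSON.stringify({ alg: "HS256", typ: "JWT" }));
  const payload = base64url(JSON.stringify(claims));
  const signature = createHmac("sha256", secret).update(`${header}.${payload}`).digest("base64url");
  return `${header}.${payload}.${signature}`;
}

// One token per tenant: each listed endpoint gets the organization as a
// fixed parameter, so the pipe's organization filter can't be overridden.
function tenantClaims(
  workspaceId: string,
  organization: string,
  pipes: string[],
  ttlSeconds: number,
): TinybirdClaims {
  return {
    workspace_id: workspaceId,
    name: `dashboard_${organization}`,
    exp: Math.floor(Date.now() / 1000) + ttlSeconds,
    scopes: pipes.map((resource): TinybirdScope => ({
      type: "PIPES:READ",
      resource,
      fixed_params: { organization },
    })),
    limits: { rps: 10 }, // placeholder per-token rate limit
  };
}
```

The fixed parameter only isolates tenants if the pipe actually filters on it, so each endpoint's SQL has to use that parameter in its WHERE clause.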

Alerting

This app template is just a dashboard, but it's easy to extend the APIs for alerting with Grafana, Datadog, or other observability platforms. You can tweak the endpoints to return responses in Prometheus format, integrate them with your observability (o11y) platform, and alert on LLM usage anomalies as you would for any other application.
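One way to handle the Prometheus side outside Tinybird is a small conversion step, for example in a Next.js route that proxies an endpoint. The sketch below turns aggregated rows (say, cost per model) into the Prometheus text exposition format; the row shape, metric name, and labels are illustrative assumptions.

```typescript
// One aggregated row, e.g. from an endpoint returning cost per model.
// The row shape is an assumption for illustration.
interface CostRow {
  model: string;
  provider: string;
  cost: number;
}

// Escape label values as the Prometheus text format requires:
// backslash, double quote, and newline.
function escapeLabel(value: string): string {
  return value.replace(/\\/g, "\\\\").replace(/"/g, '\\"').replace(/\n/g, "\\n");
}

// Render rows as a gauge in the Prometheus text exposition format.
function toPrometheus(metric: string, help: string, rows: CostRow[]): string {
  const lines = [`# HELP ${metric} ${help}`, `# TYPE ${metric} gauge`];
  for (const row of rows) {
    const labels = `model="${escapeLabel(row.model)}",provider="${escapeLabel(row.provider)}"`;
    lines.push(`${metric}{${labels}} ${row.cost}`);
  }
  // Every line, including the last, should end with a newline.
  return lines.join("\n") + "\n";
}
```

A gauge fits here because the endpoint returns a current aggregate for the selected window rather than a monotonically increasing counter.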

How to make the LLM Performance Tracker your own

The app is open source, so you can fork it and modify it to suit your needs. We welcome PRs!

Here's our recommended workflow:

Start your local development environment

Fork the repo, then start the local Next.js and Tinybird servers to run the full app locally.

Configure your environment variables.

Make changes

You can update the resources manually or use tb create --prompt to have Tinybird update or create new data sources or APIs.

Some ideas for ways you could improve or extend the template:

- Add new dimensions specific to your use case. For example, add filtering by feature flag.

- Create materialized views to roll up aggregations and show dynamic time groupings in the time series charts.

- Create a new API to visualize conversation success or eval aggregations over time.
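For dynamic time groupings, the dashboard has to choose a bucket size that fits the selected range before it queries a rollup. A minimal sketch of that choice; the granularity names, the 200-point cap, and the idea of passing the result to an endpoint parameter are assumptions, not part of the template.

```typescript
type Granularity = "minute" | "hour" | "day";

// Bucket widths in milliseconds.
const BUCKET_MS: Record<Granularity, number> = {
  minute: 60_000,
  hour: 3_600_000,
  day: 86_400_000,
};

// Pick the finest granularity that keeps the chart at or below maxPoints
// buckets; falls back to the coarsest one for very long ranges.
function pickGranularity(start: Date, end: Date, maxPoints = 200): Granularity {
  const span = end.getTime() - start.getTime();
  const options: Granularity[] = ["minute", "hour", "day"];
  for (const g of options) {
    if (span / BUCKET_MS[g] <= maxPoints) return g;
  }
  return "day";
}

// Truncate a timestamp to the start of its bucket, in UTC.
function bucketStart(ts: Date, g: Granularity): Date {
  return new Date(Math.floor(ts.getTime() / BUCKET_MS[g]) * BUCKET_MS[g]);
}
```

The same value could select which materialized view (per-minute, hourly, or daily) an endpoint reads from, so longer ranges scan fewer pre-aggregated rows.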

There are many more things you can add. (By the way, Tinybird will automatically create a rules file for Cursor or Windsurf, so you can easily prompt your IDE to update the Next app based on changes you make to your Tinybird endpoints.)

Validate your changes

You can create mock data fixtures to see how your new app behaves locally.

You can also create unit tests for your endpoints with tb test create <endpoint name>.
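The mock data fixtures mentioned above can also come from a small script. A hedged sketch that emits NDJSON lines you could append to a local llm_events data source; the field names and dimension values are hypothetical, and a seeded PRNG (mulberry32) keeps the fixtures reproducible between runs.

```typescript
// Small seeded PRNG (mulberry32) so fixtures are reproducible across runs.
function mulberry32(seed: number): () => number {
  let a = seed >>> 0;
  return () => {
    a = (a + 0x6d2b79f5) >>> 0;
    let t = a;
    t = Math.imul(t ^ (t >>> 15), t | 1);
    t ^= t + Math.imul(t ^ (t >>> 7), t | 61);
    return ((t ^ (t >>> 14)) >>> 0) / 4294967296; // uniform in [0, 1)
  };
}

// Hypothetical fixture shape and dimension values.
interface MockEvent {
  timestamp: string;
  organization: string;
  model: string;
  prompt_tokens: number;
  completion_tokens: number;
  status: "success" | "error";
}

const ORGS = ["acme", "globex"];
const MODELS = ["example-model-small", "example-model-large"];

function pick<T>(items: T[], rand: () => number): T {
  return items[Math.floor(rand() * items.length)];
}

// Generate `count` events one minute apart, as NDJSON lines.
function mockEvents(count: number, seed: number, start = Date.UTC(2025, 0, 1)): string {
  const rand = mulberry32(seed);
  const lines: string[] = [];
  for (let i = 0; i < count; i++) {
    const event: MockEvent = {
      timestamp: new Date(start + i * 60_000).toISOString(),
      organization: pick(ORGS, rand),
      model: pick(MODELS, rand),
      prompt_tokens: 50 + Math.floor(rand() * 950),
      completion_tokens: 10 + Math.floor(rand() * 490),
      // Roughly 5% failures so error views have something to show.
      status: rand() < 0.05 ? "error" : "success",
    };
    lines.push(JSON.stringify(event));
  }
  return lines.join("\n");
}
```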

Deploy

When you're ready, commit the changes and push to your git remote (make sure to add your Tinybird host and token to your GitHub Actions secrets so the supplied CI/CD workflows can test and deploy your Tinybird project). Alternatively, you can deploy the Tinybird project manually with tb --cloud deploy.

And if you need to fully own your AI analytics, you can use your own infra.

How to deploy the LLM Performance Tracker

Want to start using it now to monitor your AI app? Just fork the repo and deploy to Vercel and Tinybird, then add a few lines of code to instrument your app to send events to Tinybird. Here are guides for LiteLLM and Vercel AI SDK providers. If you have a different provider and need support, come find us in our Slack community.

What if I need something more advanced?

The LLM Performance Tracker is designed to be simple, lightweight, and extensible.

If you need more advanced LLM observability features out of the box, check out Dawn, which is like Sentry for AI products.

They provide incredibly powerful monitoring of issues and user intents in your AI application.

(And they also build their timeseries/aggregation features with Tinybird 💪)