Log Analytics: how to identify trends and correlations that Log Analysis tools cannot

Introducing the Tinybird Log Analytics Starter Kit. It’s an open-source template to help you take your Log Analysis to the next level using analytics that help you examine your software logs to identify trends, patterns, and more. It’s free and available today.

Developers make software. Software generates logs. Developers use logs to make better software. This is the way.

But as your software gets bigger, it generates more logs than you can reasonably analyze on your own. So you try to find some kind of Log Analysis tool to search through the noise. There are a lot of Log Analysis tools out there. Some are great, and some are not.

But even the great ones tend to be very opinionated; they assume that your software is software just like any other software, but that’s just not the case anymore. The way you debug your Node.js API is far different than how you debug your new kernel driver.

On top of that, most Log Analysis tools really focus on free text search. They let you filter through heaps of logs to find specific occurrences of errors, to track down the needle in the haystack. Their analytical capabilities tend to be quite lacking, especially at scale.

This is the essential difference between Log Analysis and Log Analytics:

- Log Analysis: helps you find the needle in the haystack when you know where to look

- Log Analytics: helps identify trends, patterns, and so on to guide you to look in the right place

Moreover, finding a single log within a heap of logs doesn’t always answer the important questions that developers have; questions like:

- How many times have we seen this error before?

- Which functions are the most common source of this error?

- Has the frequency of this error changed over time, or between releases?

- Do particular devices or referrers or CPU architectures or operating systems result in higher error rates?

Unfortunately, the state of the art in Log Analysis tools doesn’t do much to help you answer these questions. If you want an analytics tool that answers them, you’ll have to build it yourself.

But Log Analytics isn’t as easy as it should be for developers. It generally requires infrastructure that, until now, didn’t exist in a form available to most developers. It also requires skills that aren’t table stakes for most developers, even the hardcore backenders.

From an infrastructure point of view, collecting logs involves some serious scale. We’re talking hundreds of millions to billions of rows for even moderately sized projects. A normal database just isn’t going to handle that scale. Have you tried running analytics over hundreds of millions of rows in Postgres? It’s not pretty, and it’s not what Postgres was built for. Many developers would have to step outside of their RDBMS comfort zones to be able to scale their Log Analytics.

Even after you’ve solved your infrastructure dilemma, it’s not just about the database, but all the things around it. You could probably do without writing yet another ORM and HTTP API layer to ingest or export data from a database, especially if it’s a database you’re less comfortable with (see above). You’d probably love to avoid configuring and managing yet another nginx web server, too.

Even after infrastructure and development challenges are solved, defining metrics is haaard, especially when SQL and data analytics aren’t your core skillset. Most databases are built for hardcore DBAs who love a good sub-query or two.

Finally, the real power of Log Analytics isn’t reactive analysis. It’s proactive analysis, where you examine the performance of your systems in realtime, and identify and address problems before they become catastrophic.

Late last year we launched a Web Analytics Starter Kit to give website builders a simple, easy-to-deploy template for tracking and analyzing traffic on their websites.

Since then, it’s been deployed hundreds of times by all types of developers, in all sizes of organizations, all around the world. Complete SaaS products have been built on top of it. Heck, we’re even using it to get analytics on our own website.

I recently migrated @dubdotsh's time-series analytics data from Redis to @tinybirdco.

The results? ~100x reduction in request bandwidth (and a similar reduction in request latency)

Here's why Tinybird is the 🐐 database solution for time-series data 📊👇

(Steven Tey, @steventey, January 3, 2023)

Suffice it to say, developers seem to like it. So we’re running it back, with a twist.

Log Analytics just got easier for developers.

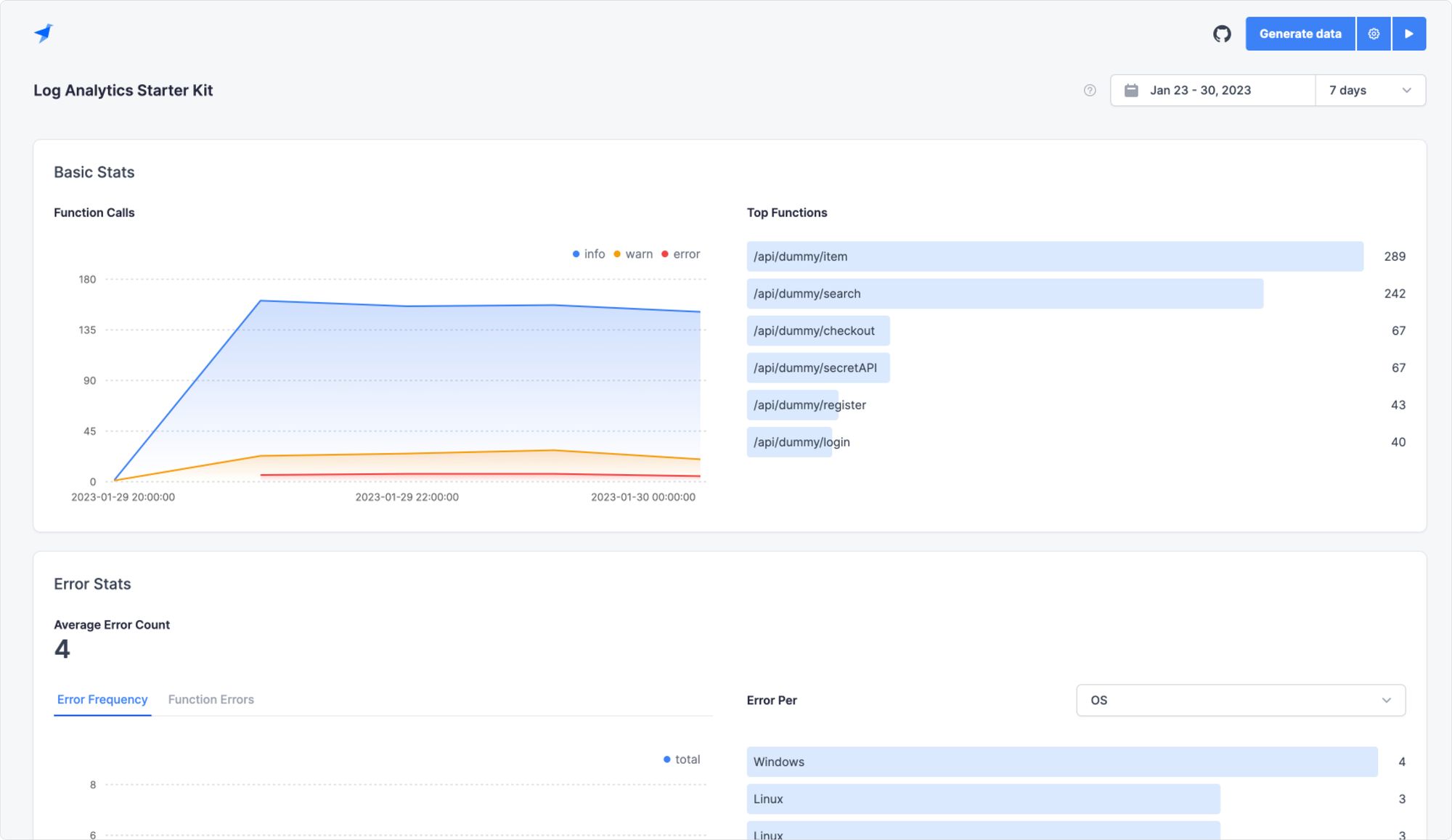

Today, we’re releasing the Tinybird Log Analytics Starter Kit, an open-source template for building your own Log Analytics over Tinybird. It’s language-agnostic, deploys in minutes, and allows any developer to build deep, meaningful metrics and visualize them in a beautiful dashboard with skills they already have.

You can use it to kickstart your Log Analytics and start building better software.

The Log Analytics Starter Kit is built on Tinybird, which means you get several advantages straight away:

- Tinybird is scalable. As your application grows in complexity and popularity, so does the quantity of your logs. Tinybird is a serverless managed service that scales with you as you grow, so you never have to worry about out-sizing your Log Analytics.

- It’s easy to use. We focus on the developer experience from the get-go, meaning you can get up and running with Tinybird in seconds with just a handful of clicks.

- We make SQL easy. Tinybird uses the SQL you already know and love, and we provide a beautiful notebook-like environment for writing your queries.

- Tinybird is realtime. We process data instantly, at scale, and expose that data as APIs so that your applications and custom dashboards can programmatically respond to that information in realtime as well.

Log Analytics helps your development team build custom metrics over huge volumes of log data so they can be more:

- Efficient in finding bugs and defects in your applications and systems

- Productive when managing the development process

- Proactive in maintaining the health of your applications and systems

Read on for more information on why we built the Log Analytics Starter Kit and what you can do with it. You can also start developing with Tinybird for free today (there’s even a special offer for new customers below!). If you’re trying to build analytics on streaming data, such as logs, you’ll likely find Tinybird useful, so feel free to give it a try. Finally, if you are itching to learn even more, you can join our Developer Advocates for a Live Coding Stream on building Log Analytics using Tinybird on March 1, 2023 at 1700 GMT / 12pm EST / 9am PST.

The state of Log Analysis today

Of all signals used in observability systems today, logging has perhaps the deepest and richest history. Nearly all programming languages have built-in logging capabilities or are associated with popular logging libraries. While many have tried, most have failed to “revolutionize” logging. It is what it is and always has been: a motley collection of error messages, statuses, and random information, all timestamped and streamed from your custom code, included libraries, or system infrastructure.

As such, logging systems tend to be weakly integrated with observability infrastructure. Whereas metrics and traces tend to be structured data, logs tend to be more unstructured. Getting insight into logs is difficult without tediously poring over them, line by line. Initiatives like OpenTelemetry attempt to put structure around this information, but it is nonetheless a very difficult problem to solve.

At Tinybird, we know this all too well, as we run infrastructure at scale. And, of course, we do a ton of Log Analysis to diagnose errors and meet our goal of 99.999% uptime. Log Analysis is an integral aspect of maintaining our systems. Like most backend developers today, we have to sift through tons of log entries. For our CI pipelines alone, we currently store several billion rows of logs.

The steps we take to perform Log Analysis are no different from those most developers take:

- Normalize the data. We make sure all our log data is in a consistent format.

- Filter data to remove noise. We discard log entries that are more routine and retain information that could be more interesting in the event of an incident.

- Organize data into categories. We begin to classify and tag log entries so that it is easier to search the data when an incident or event happens.
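The three steps above can be sketched in a few lines of Python. This is only an illustration, not how Tinybird's own pipeline works: the input field names (`ts`, `level`, `msg`), the set of "noisy" levels, and the keyword-to-category mapping are all assumptions made up for the example.

```python
import json
from datetime import datetime, timezone

# Hypothetical conventions; adjust to your own logs.
NOISY_LEVELS = {"DEBUG", "TRACE"}
CATEGORY_KEYWORDS = {
    "timeout": "network",
    "connection": "network",
    "permission": "auth",
    "token": "auth",
}


def normalize(raw_line):
    """Step 1: parse a JSON log line into one consistent shape."""
    entry = json.loads(raw_line)
    ts = datetime.fromtimestamp(entry["ts"], tz=timezone.utc)
    return {
        "timestamp": ts.isoformat(),
        "level": str(entry.get("level", "INFO")).upper(),
        "message": entry.get("msg", ""),
    }


def is_interesting(entry):
    """Step 2: drop routine entries that only add noise."""
    return entry["level"] not in NOISY_LEVELS


def categorize(entry):
    """Step 3: tag the entry with the first category whose keyword matches."""
    message = entry["message"].lower()
    category = next(
        (cat for keyword, cat in CATEGORY_KEYWORDS.items() if keyword in message),
        "other",
    )
    return {**entry, "category": category}


def process(raw_lines):
    """Run normalize -> filter -> categorize over an iterable of raw lines."""
    normalized = (normalize(line) for line in raw_lines)
    return [categorize(entry) for entry in normalized if is_interesting(entry)]
```

Calling `process()` on a list of raw JSON lines returns only the non-noisy entries, each with a UTC timestamp, an upper-case level, and a `category` tag you can search on later.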

Most Log Analysis tools are focused on indexing and searching, which is important when an event happens that requires investigation. There are a number of tools to choose from. Here are some of our favorite Log Analysis tools:

- The ELK stack (including Elasticsearch, OpenSearch & ChaosSearch)

- Datadog

- Graylog

- Logz.io

- Grafana Loki

Having one of these tools may be necessary, but on their own, they are not sufficient for today’s needs. Modern distributed systems emit relentless streams of log data. It is critical that you have analytics tools at your disposal to help you pinpoint where to look in your log data when an incident or event occurs.

Log Analytics: the best way to understand trends in your systems

Adding a Log Analytics solution to your operations will save you time and money. Instead of only being able to use a bottom-up approach sifting through logs, you’ll be able to layer in a top-down approach to guide your analysis. With Log Analytics, you’ll be able to ask more insightful questions, such as:

- Which functions cause the most errors?

- Has the overall rate of errors changed over time?

- Is a function producing more errors on average after a new release?

- Does a particular CPU architecture produce more errors?

- Is there a correlation between web referrers and errors?

For example, the following query gives you the error count per API over time. In your dashboard, you could plot this against the historical average error rate.
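A minimal sketch of such a query, run here against an in-memory SQLite table so that it is self-contained. The `logs` table, its columns (`ts`, `api`, `level`), and the sample rows are assumptions for illustration, not the Starter Kit's actual schema. On Tinybird you would write the same idea in its own SQL dialect, where time bucketing would more likely use a function such as `toStartOfHour`.

```python
import sqlite3

# Hypothetical schema: one row per log entry.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE logs (ts TEXT, api TEXT, level TEXT)")
conn.executemany(
    "INSERT INTO logs VALUES (?, ?, ?)",
    [
        ("2023-02-01 10:05:00", "/users", "ERROR"),
        ("2023-02-01 10:20:00", "/users", "ERROR"),
        ("2023-02-01 10:30:00", "/orders", "INFO"),
        ("2023-02-01 11:10:00", "/orders", "ERROR"),
        ("2023-02-01 11:15:00", "/users", "INFO"),
    ],
)

# Bucket error entries by hour and by API, then count them.
ERRORS_PER_API_PER_HOUR = """
    SELECT strftime('%Y-%m-%d %H:00', ts) AS hour,
           api,
           COUNT(*) AS error_count
    FROM logs
    WHERE level = 'ERROR'
    GROUP BY hour, api
    ORDER BY hour, api
"""

rows = conn.execute(ERRORS_PER_API_PER_HOUR).fetchall()
for hour, api, error_count in rows:
    print(hour, api, error_count)
```

Each result row is one point on a time series per API, which is the shape a dashboard chart needs.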

Now, instead of waiting for enough users to complain about things breaking, and then having to sift through the logs to identify the root cause, you can proactively monitor for, and alert on, increased error frequency.
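As a rough sketch of what "alert on increased error frequency" could mean in code, the check below compares the latest error count against the historical average. The function name and the default factor of 2.0 are arbitrary assumptions; a real alerting rule would need tuning for your own traffic.

```python
from statistics import mean


def should_alert(historical_counts, current_count, factor=2.0):
    """Return True when the current error count exceeds `factor` times
    the historical average. With no history there is no baseline, so
    the check stays quiet."""
    if not historical_counts:
        return False
    return current_count > factor * mean(historical_counts)
```

You could run a check like this on a schedule against the error counts your analytics query returns, and notify your team when it returns `True`.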

Combining contextual information with logs to look for correlations is an even more powerful mechanism. Using this example, you can look at a combination of web requests and logs to see if errors on your APIs occur more frequently when traffic comes from certain referrers:
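A minimal sketch of that kind of correlation query, again using in-memory SQLite to keep it runnable. The `requests` and `logs` tables, the `request_id` join key, and the sample rows are hypothetical, and the example assumes one log entry per request to keep the arithmetic simple.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE requests (request_id INTEGER, referrer TEXT);
    CREATE TABLE logs (request_id INTEGER, level TEXT);
    """
)
conn.executemany(
    "INSERT INTO requests VALUES (?, ?)",
    [
        (1, "google.com"), (2, "google.com"), (6, "google.com"),
        (3, "twitter.com"), (4, "twitter.com"), (5, "twitter.com"),
    ],
)
conn.executemany(
    "INSERT INTO logs VALUES (?, ?)",
    [(1, "INFO"), (2, "ERROR"), (3, "ERROR"), (4, "ERROR"), (5, "INFO"), (6, "INFO")],
)

# Join web requests to their log entries and compute an error rate per referrer.
ERROR_RATE_BY_REFERRER = """
    SELECT r.referrer,
           COUNT(*) AS requests,
           SUM(l.level = 'ERROR') AS errors,
           ROUND(1.0 * SUM(l.level = 'ERROR') / COUNT(*), 2) AS error_rate
    FROM requests AS r
    JOIN logs AS l ON l.request_id = r.request_id
    GROUP BY r.referrer
    ORDER BY error_rate DESC
"""

rows = conn.execute(ERROR_RATE_BY_REFERRER).fetchall()
for referrer, requests, errors, error_rate in rows:
    print(referrer, requests, errors, error_rate)
```

Sorting by error rate puts the referrers with the highest share of failing requests at the top, which is where you would start looking.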

You can imagine building dashboards that segment your traffic between your mobile application and your website, and proactively identify errors when they occur with greater frequency.

In today’s world where business performance is tied to the performance of massive distributed systems, being proactive and gaining realtime insight can mean saving millions of dollars in lost brand affinity, sales, and/or downtime.

The Log Analytics Starter Kit

It doesn’t have to be painful, or take months, to build a Log Analytics solution that is totally custom to your software project. With the Log Analytics Starter Kit, you can get started today and see results immediately.

Developers love Tinybird because Tinybird makes it delightful to work with data at scale, even if you don’t have database skills or SQL mastery on your CV. This Starter Kit provides an end-to-end example of how to build custom Log Analytics for a Web Application project. Not building a Web App? Customize it!

You’re not going to waste any time choosing an ORM, API library, or web server. All of that comes right “out of the box.” Just build the insights that matter to you.

Why should you use it?

There are a few advantages to using the Tinybird Log Analytics Starter Kit to supplement your Log Analysis tool:

It’s totally language-agnostic

There are no client libraries or custom data formats. The Log Analytics Starter Kit uses the Tinybird Events API, which is a standard HTTP endpoint for high-frequency log ingestion. Just send a POST request with your logs in JSON. Tinybird will do the rest.
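Here is a sketch of what such a POST request could look like using only Python's standard library. The API host shown may differ depending on your Tinybird region, and the Data Source name, token, and event fields are placeholders you would supply yourself. The function only builds the request, so nothing is sent until you choose to send it.

```python
import json
import urllib.request
from urllib.parse import urlencode

# Assumption: your workspace may live on a different regional host.
EVENTS_URL = "https://api.tinybird.co/v0/events"


def build_events_request(datasource, token, events):
    """Build (but do not send) a POST request carrying one JSON object per line."""
    body = "\n".join(json.dumps(event) for event in events).encode("utf-8")
    return urllib.request.Request(
        url=f"{EVENTS_URL}?{urlencode({'name': datasource})}",
        data=body,
        headers={"Authorization": f"Bearer {token}"},
        method="POST",
    )
```

To actually send the logs, pass the returned object to `urllib.request.urlopen()`. Batching several events into one request, as the newline-joined body does here, keeps the number of HTTP calls down when your application logs frequently.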

It’s based on REST APIs

Tinybird lets you query your log data with SQL and instantly publish those queries as low-latency REST APIs. The Starter Kit includes some interesting metrics written in SQL that you can augment as needed.
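Consuming a published endpoint from your own code could look roughly like the sketch below. The pipe name and query parameters are hypothetical (the Starter Kit's real endpoint names are in its repository), the API host may differ by region, and the code assumes the JSON response carries its result rows under a `data` key.

```python
import json
import urllib.request
from urllib.parse import urlencode

# Assumption: your workspace may live on a different regional host.
API_HOST = "https://api.tinybird.co"


def build_endpoint_request(pipe_name, token, **params):
    """Build (but do not send) a GET request to a published endpoint."""
    query = f"?{urlencode(params)}" if params else ""
    return urllib.request.Request(
        url=f"{API_HOST}/v0/pipes/{pipe_name}.json{query}",
        headers={"Authorization": f"Bearer {token}"},
    )


def rows_from_response(payload):
    """Extract the result rows from a JSON response body."""
    return json.loads(payload).get("data", [])
```

Send the request with `urllib.request.urlopen()` and pass the decoded body to `rows_from_response()` to get a list of dictionaries, one per result row, ready to feed into a chart or an alerting check.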

The Starter Kit also comes with a Next.js dashboard that uses the lovely Tremor UI library. It’s super easy to adapt this dashboard to your project to show whatever crazy charts you need.

That said, you can just as easily ditch our dashboard and use your favorite observability tools. Grafana? No problem. Datadog? Easy peasy. Use the viz tool that works for you.

It’s completely customizable

The Starter Kit includes plenty of example metrics to get you started, but you can customize them to whatever extent you need, with nothing but simple SQL. And by the way, exploratory queries in the Tinybird UI are always free. Prototype your heart out.

It can be deployed in minutes

Despite being extensible and customizable from collection to visualization, the Log Analytics Starter Kit can be fully deployed in just a few minutes. And with Tinybird’s generous Build plan, you’ll have 10 GB of storage and 1,000 requests per day to your published endpoints for free.

How do you deploy it?

You can deploy the Tinybird Log Analytics Starter Kit right now. Here are a few ways to do it:

From the GitHub repository

Navigate to the GitHub repository to get started. The README can guide you through how to deploy the Tinybird data project and the Next.js dashboard.

You can deploy the data project to Tinybird directly, or clone the repo and set up your workspace using the Tinybird CLI. Then, deploy the dashboard to Vercel, or just use our hosted dashboard.

From the Starter Kit page

For a more guided walkthrough, check out the Log Analytics Starter Kit webpage, which offers a tutorial video and a handful of simple steps to get you up and running.

Want to learn more? Join us for a live stream!

Don’t forget, if you want to learn even more about logging, log analysis, and log analytics, you can join our Developer Advocates for a Live Coding Stream on building Log Analytics using Tinybird on March 1, 2023 at 1700 GMT / 12pm EST / 9am PST.

Need more free? Use this code.

As I mentioned, you can start for free. But if you start bumping up against that 10 GB limit or you’ve got an analytics-hungry team hammering your dashboard, then we’d like to take a load off for you.

Use the code MORE_LOGS_MORE_POWER for $150 towards a Tinybird Pro plan subscription.

As always, if you have any questions, you can join us in our community Slack.