A practical guide to real-time CDC with MongoDB

A step-by-step guide to setting up Change Data Capture (CDC) with MongoDB Atlas, Confluent Cloud, and Tinybird.

Change Data Capture (CDC) is a design pattern that allows you to track and capture change streams from a source data system so that downstream systems can efficiently process these changes. In contrast to Extract, Transform, and Load (ETL) workflows, it can be used to update data in real-time or near real-time between databases, data warehouses, and other systems. The changes include inserts, updates, and deletes, and are captured and delivered to the target systems without requiring the source database to be queried directly.

In this blog post, I'll describe how to implement a real-time change data capture (CDC) pipeline on changes in MongoDB, using both Confluent and Tinybird.

How to work with MongoDB change streams in real-time

In this tutorial, I’ll use Confluent to capture change streams from MongoDB, and Tinybird to analyze those change streams through its native Confluent Connector. Why Tinybird? For real-time analytics on change data, Tinybird serves as an ideal data sink.

This is an alternative to using Debezium, a popular open source framework for change data capture, together with Debezium’s MongoDB Connector. Debezium is a perfectly viable solution, but this guide focuses on real-time analytics use cases.

With Tinybird, you can transform, aggregate, and filter MongoDB changes as they happen and expose them via high-concurrency, low-latency APIs. Using Tinybird with your CDC data offers several benefits:

- Real-Time Analytics: Tinybird processes MongoDB's oplog to provide real-time analytics on data changes.

- Data Transformation and Aggregation: With SQL Pipes, Tinybird enables real-time shaping and aggregation of the incoming MongoDB change data, a critical feature for handling complex data scenarios.

- High-Concurrency, Low-Latency APIs: Tinybird empowers you to publish your data transformations as APIs that can manage high concurrency with minimal latency, essential for real-time data interaction.

- Operational Intelligence: Real-time data processing allows you to gain operational intelligence, enabling proactive decision-making and immediate response to changing conditions in your applications or services.

- Event-Driven Architecture Support: Tinybird's processing of the oplog data facilitates the creation of event-driven architectures, where MongoDB database changes can trigger business processes or workflows.

- Efficient Data Integration: Rather than batching updates at regular intervals, Tinybird processes and exposes changes as they occur, facilitating downstream system synchronization with the latest data.

- Scalability: Tinybird's ability to handle large data volumes and high query loads ensures that it can scale with your application, enabling the maintenance of real-time analytics even as data volume grows.

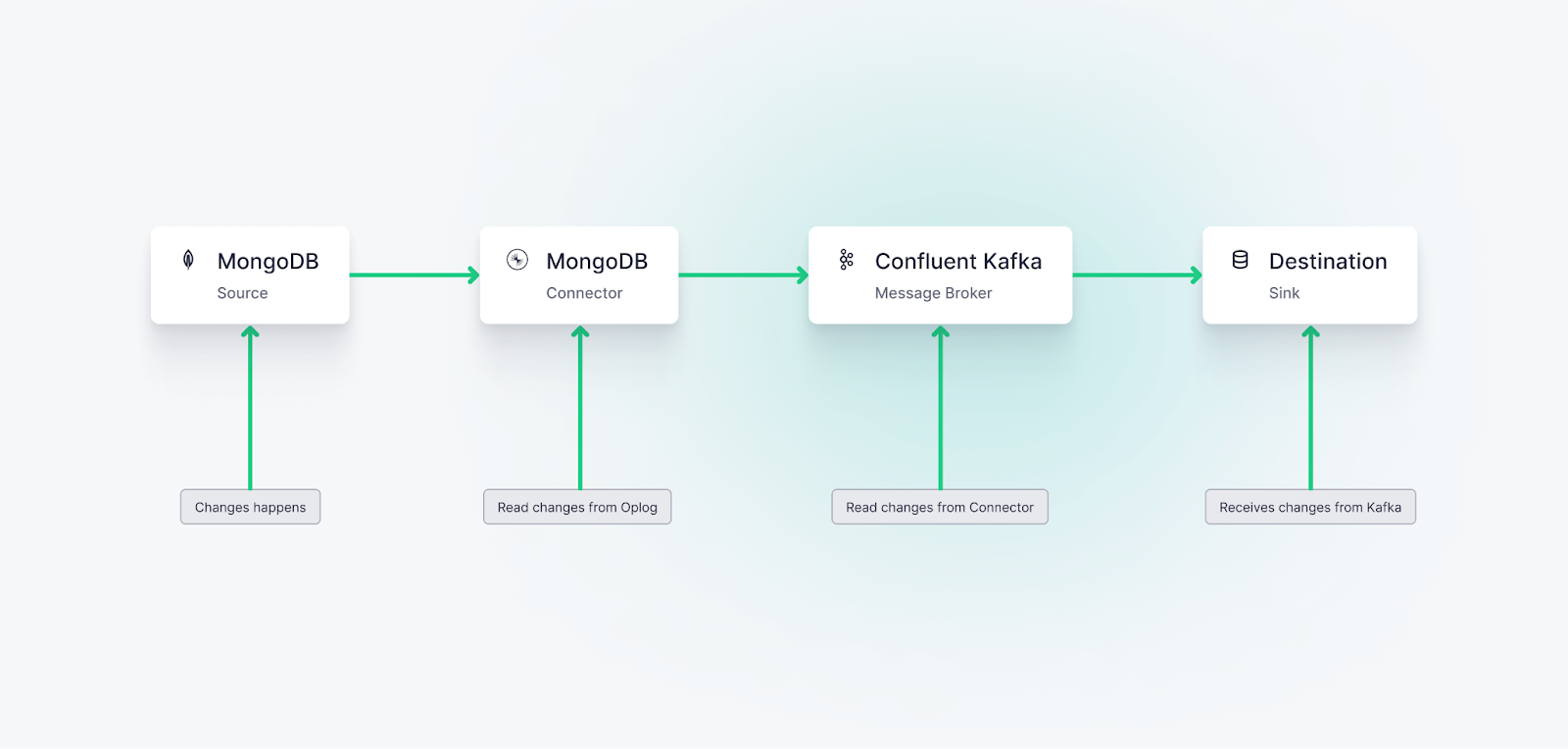

How does MongoDB CDC work?

CDC with MongoDB works primarily through the oplog, a special capped collection in MongoDB that logs all operations modifying the data stored in your databases.

When a change event such as an insert, update, or delete occurs in your MongoDB instance, the change is recorded in the oplog. This log is part of MongoDB's built-in replication mechanism and it maintains a rolling record of all data-manipulating operations.

CDC processes monitor this oplog, capturing the changes as they occur. These changes can then be propagated to other systems or databases, ensuring they have near real-time updates of the data.

In the context of MongoDB Atlas and a service like Confluent Kafka, MongoDB Atlas runs as a replica set and therefore maintains an oplog. A connector (like the MongoDB Source Connector) then reads the changes recorded in the oplog, which MongoDB exposes through its change streams API, and streams them to Kafka topics. From there, the changes can be further processed or streamed to other downstream systems as your application requires.
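To make the mechanics concrete, here is a minimal Python sketch of how a downstream consumer might apply captured changes to keep its own copy in sync. The event fields (`operationType`, `documentKey`, `fullDocument`, `updateDescription`) follow the shape of MongoDB change events. The `apply_change` helper, the in-memory `replica` dict, and the sample events are hypothetical and stand in for whatever target system and data you have. The sketch only handles top-level fields.

```python
from typing import Any, Dict

Replica = Dict[Any, Dict[str, Any]]


def apply_change(replica: Replica, event: Dict[str, Any]) -> None:
    """Apply one change event to an in-memory copy keyed by _id."""
    op = event["operationType"]
    doc_id = event["documentKey"]["_id"]

    if op in ("insert", "replace"):
        # Inserts and replaces carry the whole document.
        replica[doc_id] = dict(event["fullDocument"])
    elif op == "update":
        # Updates carry only the delta: fields that were set or removed.
        doc = replica.setdefault(doc_id, {"_id": doc_id})
        delta = event["updateDescription"]
        doc.update(delta.get("updatedFields", {}))
        for field in delta.get("removedFields", []):
            doc.pop(field, None)
    elif op == "delete":
        replica.pop(doc_id, None)
    # Other event types (drop, invalidate, and so on) are ignored in this sketch.


# Made-up events, in the order they would be read from the stream.
events = [
    {"operationType": "insert", "documentKey": {"_id": 1},
     "fullDocument": {"_id": 1, "status": "new", "total": 10}},
    {"operationType": "update", "documentKey": {"_id": 1},
     "updateDescription": {"updatedFields": {"status": "paid"},
                           "removedFields": ["total"]}},
    {"operationType": "insert", "documentKey": {"_id": 2},
     "fullDocument": {"_id": 2, "status": "new"}},
    {"operationType": "delete", "documentKey": {"_id": 2}},
]

replica: Replica = {}
for event in events:
    apply_change(replica, event)

print(replica)  # {1: {'_id': 1, 'status': 'paid'}}
```

Because each event is applied in order, the copy converges to the source state without ever querying the source collection directly.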

How to set up CDC with MongoDB, Confluent Connect, and Tinybird

Let's create a CDC pipeline using MongoDB Atlas and Confluent Cloud.

Step 1: Configure MongoDB Atlas

- Create an account with MongoDB Atlas, and create your MongoDB database cluster in MongoDB Atlas if you don't have one yet.

- Ensure that your cluster runs as a replica set if you are running MongoDB locally. MongoDB Atlas clusters are replica sets by default, so if you create a cluster with Atlas, you shouldn’t have to do any extra configuration.

- If you are running MongoDB locally, check that your deployment generates an oplog. The oplog (operations log) is a special capped collection that keeps a rolling record of metadata describing all operations that modify the data stored in your databases. MongoDB Atlas does this by default, so again, no extra configuration should be required.
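If you want to verify the replica set requirement programmatically, one option is to inspect the response of MongoDB's `hello` command, which includes a `setName` field on replica set members. The sketch below assumes the `pymongo` package for the live check. The helper names and the sample responses are hypothetical, and the check only distinguishes a replica set member from a standalone server.

```python
from typing import Any, Mapping


def is_replica_set_member(hello: Mapping[str, Any]) -> bool:
    """Return True if a `hello` command response comes from a replica set member."""
    # Replica set members report the set's name in `setName`;
    # standalone servers omit the field.
    return bool(hello.get("setName"))


def check_deployment(uri: str) -> bool:
    """Run `hello` against a live deployment (requires the pymongo package)."""
    # Imported lazily so the pure helper above has no third-party dependency.
    from pymongo import MongoClient

    client = MongoClient(uri)
    try:
        return is_replica_set_member(client.admin.command("hello"))
    finally:
        client.close()


# Example responses, trimmed to the relevant fields (values are made up).
standalone = {"isWritablePrimary": True}
replica_member = {"isWritablePrimary": True, "setName": "rs0"}

print(is_replica_set_member(standalone), is_replica_set_member(replica_member))
# False True
```

Older MongoDB versions may not support `hello`, so treat this as a starting point rather than a complete check.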

Step 2: Set up Confluent Cloud

- Sign up for a Confluent Cloud account if you haven't done so already.

- Create a new environment and then create a new Kafka cluster within that environment.

- Take note of your Cluster ID, API Key, and API Secret. You'll need these later to configure your source and sink connectors.

Step 3: Configure MongoDB Connector for Confluent Cloud

- Install the Confluent Cloud CLI. Instructions for this can be found in the Confluent Cloud documentation.

- Authenticate the Confluent Cloud CLI with your Confluent Cloud account:

- Select your Confluent Cloud environment and Kafka cluster. You can find out how to do that in the Confluent docs.

- Create the MongoDB source connector with the Confluent CLI.

- You'll need your MongoDB Atlas connection string for this. Note that you need to replace $API_KEY, $API_SECRET, $SR_ARN, $BOOTSTRAP_SERVER, $CONNECTION_URI, $DB_NAME, $COLLECTION, and $TOPIC_PREFIX with your actual values:
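As a rough illustration of the placeholder substitution, the Python sketch below builds a source connector configuration from a set of values and fails early if one is missing. The property names (`connection.uri`, `database`, `collection`, `topic.prefix`) are those of the MongoDB Kafka source connector; the fully managed Confluent Cloud connector may expect different names, so check its documentation. The Kafka credential settings are left out on purpose because they depend on how the connector is deployed. The helper names and sample values are hypothetical.

```python
import json
from typing import Dict, Mapping

REQUIRED = ("CONNECTION_URI", "DB_NAME", "COLLECTION", "TOPIC_PREFIX")


def build_source_config(values: Mapping[str, str]) -> Dict[str, str]:
    """Map placeholder values onto source connector properties."""
    missing = [name for name in REQUIRED if not values.get(name)]
    if missing:
        raise ValueError(f"missing values for: {', '.join(missing)}")
    return {
        # Assumption: property names of the MongoDB Kafka source connector.
        "connector.class": "com.mongodb.kafka.connect.MongoSourceConnector",
        "connection.uri": values["CONNECTION_URI"],
        "database": values["DB_NAME"],
        "collection": values["COLLECTION"],
        "topic.prefix": values["TOPIC_PREFIX"],
    }


def topic_name(values: Mapping[str, str]) -> str:
    """Name of the topic the connector writes to: <prefix>.<database>.<collection>."""
    return ".".join(values[key] for key in ("TOPIC_PREFIX", "DB_NAME", "COLLECTION"))


# Made-up values; in practice you might read these from os.environ.
values = {
    "CONNECTION_URI": "mongodb+srv://user:password@cluster0.example.mongodb.net",
    "DB_NAME": "shop",
    "COLLECTION": "orders",
    "TOPIC_PREFIX": "cdc",
}

config = build_source_config(values)
print(json.dumps(config, indent=2))
print(topic_name(values))  # cdc.shop.orders
```

The topic name is worth noting down, since you will need it when you point Tinybird at the Kafka topic later on.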

Step 4: Set up Tinybird

- Sign up for a free Tinybird account.

- Create a Tinybird Workspace.

- Copy your Tinybird Auth Token so you can connect Tinybird to Confluent.

Step 5: Connect Confluent Cloud to Tinybird

Tinybird’s Confluent Connector allows you to ingest data from your existing Confluent Cloud cluster and load it into Tinybird.

The Confluent Connector is fully managed and requires no additional tooling. Connect Tinybird to your Confluent Cloud cluster, choose a topic, and Tinybird will automatically begin consuming messages from Confluent Cloud.

To connect your Confluent Cloud cluster to Tinybird and start ingesting data, you will need to set up the Confluent Connector in Tinybird.

Before you start, make sure you've set up the Tinybird CLI.

After authenticating to your Tinybird Workspace, use the following command to create a connection to your Confluent cluster.

Follow the prompts to finish setting up your connection.

After creating the connection to Confluent, you’ll set up a Data Source to sink your change data streams from MongoDB. When managing your Tinybird resources in files, you will configure the Confluent Connector settings in a .datasource file. Here are the settings you need to configure:

- KAFKA_CONNECTION_NAME: The name of the configured Confluent Cloud connection in Tinybird

- KAFKA_TOPIC: The name of the Kafka topic to consume from

- KAFKA_GROUP_ID: The Kafka Consumer Group ID to use when consuming from Confluent Cloud

Here's an example of how to define a Data Source with a new Confluent Cloud connection in a .datasource file. The schema matches the CDC events you are ingesting from MongoDB and defines their data types in Tinybird. Note the importance of setting a primary key (the sorting key in Tinybird), most often the timestamp of your CDC events. You can find more detailed instructions in the Tinybird docs.
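As a rough sketch of what such a file can look like, the Python snippet below renders a .datasource file from the three Kafka settings and a list of columns. The column names, JSONPaths, connection name, group ID, and sorting key are all hypothetical; adapt them to the shape of your own change events.

```python
from typing import Iterable, Tuple

# (column name, Tinybird type, JSONPath into the Kafka message)
Column = Tuple[str, str, str]


def render_datasource(
    connection_name: str,
    topic: str,
    group_id: str,
    columns: Iterable[Column],
    sorting_key: str,
) -> str:
    """Render the text of a .datasource file for a Kafka-backed Data Source."""
    schema = ",\n".join(
        f"    `{name}` {type_} `json:{path}`" for name, type_, path in columns
    )
    return (
        "SCHEMA >\n"
        f"{schema}\n"
        "\n"
        'ENGINE "MergeTree"\n'
        f'ENGINE_SORTING_KEY "{sorting_key}"\n'
        "\n"
        f"KAFKA_CONNECTION_NAME {connection_name}\n"
        f"KAFKA_TOPIC {topic}\n"
        f"KAFKA_GROUP_ID {group_id}\n"
    )


# Hypothetical schema for change events on an "orders" collection.
columns = [
    ("operation_type", "String", "$.operationType"),
    ("order_id", "String", "$.fullDocument.order_id"),
    ("updated_at", "DateTime", "$.fullDocument.updated_at"),
]

text = render_datasource(
    connection_name="my_confluent_connection",
    topic="cdc.shop.orders",
    group_id="tinybird_cdc_orders",
    columns=columns,
    sorting_key="updated_at",
)
print(text)
```

Sorting by the event timestamp keeps time-range queries over the change data efficient, which is why it is the usual choice.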

Step 6: Start building real-time analytics with Tinybird

Your CDC data pipeline should now be up and running: it captures changes from your MongoDB Atlas database, streams them into Kafka on Confluent Cloud, and sinks them into an analytical data store on Tinybird’s real-time data platform.

You can now query, shape, join, and enrich your MongoDB CDC data with SQL Pipes and instantly publish your transformations as high-concurrency, low-latency APIs to power your next use case.
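Once a Pipe is published as an API endpoint, any HTTP client can call it. Here is a minimal Python sketch that builds the request URL. The Pipe name `orders_by_status`, its `status` parameter, and the token value are hypothetical, and the API host depends on the region of your Workspace.

```python
from typing import Mapping, Optional
from urllib.parse import urlencode


def endpoint_url(
    pipe_name: str,
    token: str,
    params: Optional[Mapping[str, str]] = None,
    host: str = "https://api.tinybird.co",  # assumption: adjust to your region
) -> str:
    """Build the URL of a published Pipe endpoint that returns JSON."""
    query = {"token": token, **(params or {})}
    return f"{host}/v0/pipes/{pipe_name}.json?{urlencode(query)}"


url = endpoint_url("orders_by_status", "YOUR_READ_TOKEN", {"status": "paid"})
print(url)
# https://api.tinybird.co/v0/pipes/orders_by_status.json?token=YOUR_READ_TOKEN&status=paid
```

You can pass the resulting URL to any HTTP client, such as `urllib.request.urlopen`, to fetch the query results as JSON.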

Wrap Up:

Change Data Capture (CDC) is a powerful pattern that captures data changes and propagates them in real-time or near real-time between various systems. Using MongoDB as the source, changes are captured through its operations log (oplog) and propagated to systems like Confluent Kafka and Tinybird using a connector.

This setup enhances real-time data processing, reduces load on the source system, and maintains data consistency across platforms, making it vital for modern data-driven applications. The post walked through the steps of setting up a CDC pipeline using MongoDB Atlas, Confluent Cloud, and Tinybird, providing a scalable solution for handling data changes and powering real-time analytics.

Resources:

- MongoDB Atlas Documentation: A comprehensive guide on how to use and configure MongoDB Atlas, including how to set up clusters.

- Confluent Cloud Documentation: Detailed information on using and setting up Confluent Cloud, including setting up Kafka clusters and connectors.

- MongoDB Connector for Apache Kafka: The official page for the MongoDB Connector on the Confluent Hub. Provides in-depth documentation on its usage and configuration.

- Tinybird Documentation: A guide on using Tinybird, which provides tools for building real-time analytics APIs.

- Change Data Capture (CDC) Overview: A high-level overview of CDC on Wikipedia, providing a good starting point for understanding the concept.

- Apache Kafka: A Distributed Streaming System: Detailed information about Apache Kafka, a distributed streaming system that's integral to the CDC pipeline discussed in this post.

FAQs

- What is Change Data Capture (CDC)? CDC is a design pattern that captures changes in data so that downstream systems can process these changes in real-time or near real-time. Changes include inserts, updates, and deletes.

- Why is CDC useful? CDC provides several advantages such as enabling real-time data processing, reducing load on source systems, maintaining data consistency across platforms, aiding in data warehousing, supporting audit trails and compliance, and serving as a foundation for event-driven architectures.

- How does CDC with MongoDB work? MongoDB uses an oplog (operations log) to record data manipulations like inserts, updates, and deletes. CDC processes monitor this oplog and capture the changes, which can then be propagated to other systems or databases.

- What is MongoDB Atlas? MongoDB Atlas is a fully managed cloud database service provided by MongoDB. It takes care of the complexities of deploying, managing, and healing your deployments on the cloud service provider of your choice.

- What is Confluent Cloud? Confluent Cloud is a fully managed, event streaming platform powered by Apache Kafka. It provides a serverless experience with elastic scalability and delivers industry-leading, real-time event streaming capabilities with Apache Kafka as-a-service.

- What is Tinybird? Tinybird is a real-time data platform that helps developers and data teams ingest, transform, and expose real-time datasets through APIs at any scale.

- Can I use CDC with other databases besides MongoDB? Yes, CDC can be used with various databases that support this mechanism such as PostgreSQL, MySQL, SQL Server, Oracle Database, and more. The specifics of implementation and configuration may differ based on the database system.

- How secure is data during the CDC process? The security of data during the CDC process depends on the tools and protocols in place. By using secure connections, authenticated sessions, and data encryption, data can be securely transmitted between systems. Both MongoDB Atlas and Confluent Cloud provide various security features to ensure the safety of your data.