How Typeform Built a Fully Functional User Dash With Tinybird

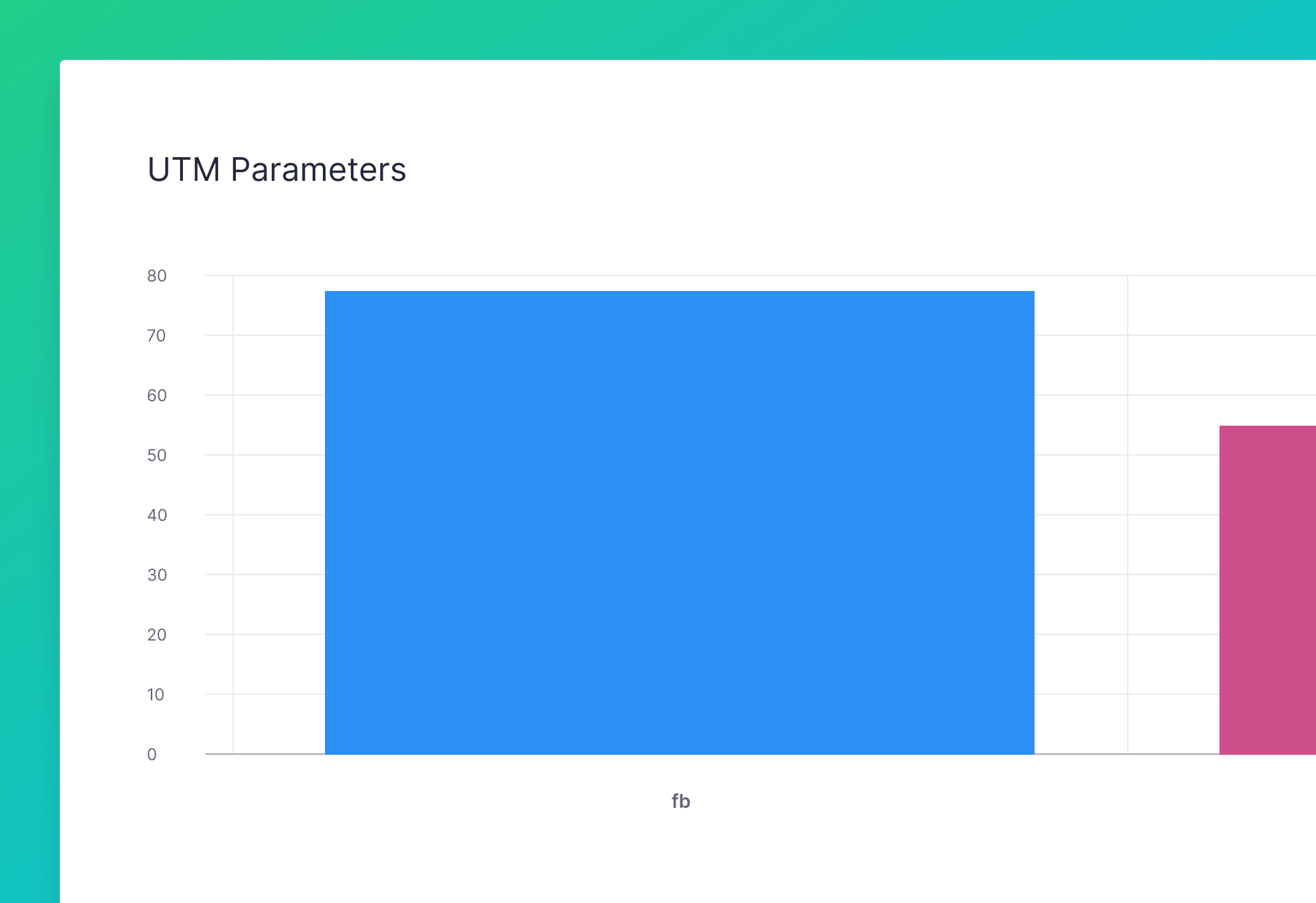

Creating an API that groups different UTM parameters from Typeform URLs

Typeform helps brands create no-code forms, quizzes, surveys, and asynchronous videos to engage their audience and grow their business with ease. We drive more than 500 million interactions every year, all around the world.

Each quarter, we hold a hackathon where the Typeform team has complete freedom to develop new features around our core product. This is the story of how we used Tinybird to add realtime tracking of UTM parameters present in a Typeform URL.

Grouping different UTM parameters with form metrics

Typeform customers typically share their forms, quizzes, and surveys across multiple platforms, including Facebook, Instagram, and more. To encourage a higher response rate, customers often share them multiple times on these platforms.

By default, there’s a UTM parameter built into each Typeform builder that collects data from each form submitted. This helps customers keep track of submissions, which are then surfaced in their admin dashboard. The dashboard is realtime, so customers can spot developing trends and quickly take action to correct any issues or capitalize on emerging opportunities. The challenge is that our customers currently can’t see other valuable related data in their dashboard, like visits, completion time, and conversion rate.

Here are just a few examples of some typical use cases we’ve seen:

- A customer sees that Facebook is the source with the most submissions, but they want to know which Facebook campaign performs best (by completion rate).

- A customer wants to know which campaign has the highest completion rate and, within that campaign, which source has the highest completion rate.

- A customer wants to know how many submissions they got from an email (medium) on their Facebook and Instagram channels (source).

For the hackathon, our team wanted to create an API with the ability to group different UTM parameters, including source, campaign, medium, content and term, along with the basic metrics of a form: views, starts, submissions, completion rate and average time to complete.
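To make the target concrete, here is a minimal Python sketch of the kind of grouping such an API performs. It is an illustration only: the event shape (`type`, `url`, `seconds_to_complete`), the function names, and the sample data are assumptions, not Typeform's actual schema, and completion rate is assumed here to mean submissions divided by starts.

```python
from collections import defaultdict
from urllib.parse import urlparse, parse_qs

UTM_KEYS = ("utm_source", "utm_medium", "utm_campaign", "utm_content", "utm_term")


def utm_params(url):
    """Extract the UTM parameters from a form URL; missing ones become ''."""
    query = parse_qs(urlparse(url).query)
    return {key: query.get(key, [""])[0] for key in UTM_KEYS}


def group_metrics(events, group_by):
    """Aggregate basic form metrics per combination of the requested UTM keys."""
    groups = defaultdict(
        lambda: {"views": 0, "starts": 0, "submissions": 0, "total_seconds": 0.0}
    )
    for event in events:
        utm = utm_params(event["url"])
        row = groups[tuple(utm[key] for key in group_by)]
        if event["type"] == "view":
            row["views"] += 1
        elif event["type"] == "start":
            row["starts"] += 1
        elif event["type"] == "submit":
            row["submissions"] += 1
            row["total_seconds"] += event.get("seconds_to_complete", 0.0)

    result = {}
    for key, row in groups.items():
        subs = row["submissions"]
        result[key] = {
            "views": row["views"],
            "starts": row["starts"],
            "submissions": subs,
            # Assumed definition: submissions / starts.
            "completion_rate": subs / row["starts"] if row["starts"] else 0.0,
            "avg_seconds_to_complete": row["total_seconds"] / subs if subs else 0.0,
        }
    return result


# Hypothetical sample data, for illustration only.
BASE = "https://example.typeform.com/to/abc"
SAMPLE_EVENTS = [
    {"type": "view", "url": f"{BASE}?utm_source=facebook&utm_campaign=spring"},
    {"type": "start", "url": f"{BASE}?utm_source=facebook&utm_campaign=spring"},
    {"type": "submit", "url": f"{BASE}?utm_source=facebook&utm_campaign=spring",
     "seconds_to_complete": 120.0},
    {"type": "view", "url": f"{BASE}?utm_source=facebook&utm_campaign=summer"},
    {"type": "start", "url": f"{BASE}?utm_source=facebook&utm_campaign=summer"},
    {"type": "view", "url": f"{BASE}?utm_source=instagram&utm_campaign=spring"},
]
```

Calling `group_metrics(SAMPLE_EVENTS, ["utm_source"])` groups by source alone, while passing `["utm_source", "utm_campaign"]` answers the "best campaign on Facebook" style of question from the use cases above.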

This data exists already, but it lives in silos. Our goal was to find a way to read a lot of events, each with its own schema, and generate a rich API for grouping and filtering in real time.

Three million events in 12 hours is no hassle

In any hackathon, it’s all about speed - finding and implementing a solution as quickly as possible. Our approach didn’t differ in this hackathon. We chose Tinybird as our analytical backend because it is serverless. We didn’t want to deploy and maintain our own database. Additionally, the other solutions we considered would have taken far too long to implement.

Although Tinybird offers multiple ways to ingest data, we decided to use their /events endpoint. We weren’t able to use Tinybird’s Kafka connector directly due to our internal security policies, so we created a Go service that consumed events from our topics and pushed them to Tinybird. On the first day, we started sending event data from several topics (forms viewed, submissions started, and submissions completed) to https://api.tinybird.co/events. We also coded a small internal API to consume the Tinybird-generated API, so we could feed the data to our frontend.
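The two HTTP interactions described above can be sketched roughly as follows. This is an illustrative Python version, not the Go service itself. It assumes the versioned Events API path (`/v0/events` with a `name` query parameter and a newline-delimited JSON body) and the `/v0/pipes/<pipe>.json` endpoint form from Tinybird's public documentation, which should be checked against the current docs. The pipe name `form_metrics`, the token, and the event fields are hypothetical. The functions only build the requests; nothing is sent.

```python
import json
import urllib.request
from urllib.parse import urlencode

TINYBIRD_HOST = "https://api.tinybird.co"


def build_events_request(datasource, events, token):
    """Build (but do not send) a POST that appends events to a Data Source.

    The body is NDJSON: one JSON object per line.
    """
    body = "\n".join(json.dumps(event) for event in events).encode("utf-8")
    url = f"{TINYBIRD_HOST}/v0/events?{urlencode({'name': datasource})}"
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={"Authorization": f"Bearer {token}"},
    )


def build_endpoint_url(pipe_name, token, **params):
    """Build the URL an internal API could call to read a published pipe."""
    query = urlencode({"token": token, **params})
    return f"{TINYBIRD_HOST}/v0/pipes/{pipe_name}.json?{query}"


# Hypothetical usage; passing the request to urllib.request.urlopen would send it.
request = build_events_request(
    "submission_finish",
    [{"form_id": "abc", "utm_source": "facebook"}],
    token="YOUR_TOKEN",
)
endpoint = build_endpoint_url("form_metrics", "YOUR_TOKEN", utm_source="facebook")
```

Batching several events into one NDJSON body, as done here, keeps the number of HTTP round trips low when a consumer is draining a busy topic.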

We were able to start using Tinybird with almost no configuration. We set up the different Data Sources, and started sending different events. When the data started coming in, we created a Tinybird pipe and generated an API endpoint. Here’s our pipe.

Next, we discovered that a query was scanning too much data, so we created a materialized view through the CLI. This preprocesses the data on ingestion and reduces the amount of data scanned at query time.

But materialized views are tricky, especially if they get called many times, so Tinybird’s team (Jordi Villar in our case) proposed this optimization: snapshot. This reduced the processed bytes and query time from ~220MB and ~5s to ~1MB and ~4.5ms: roughly 220 times less data scanned and about 1,000 times faster. Whenever new data arrives in the submission_finish Data Source, that query is executed and the result is materialized into the Data Source that the endpoints query, so the performance improvement benefits both ingestion and consumption. This was key for our use case.
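The general idea behind aggregating at ingestion time can be illustrated with a small Python analogy. This is a conceptual sketch only: it is not Tinybird's implementation and not the snapshot optimization itself, and the class name and event fields (`form_id`, `utm_source`, `seconds_to_complete`) are assumptions. The point is that each incoming batch updates a compact set of running totals, so a read touches a handful of rows instead of rescanning every raw event.

```python
from collections import defaultdict


class SubmissionRollup:
    """Keeps per-(form, source) aggregates up to date as events arrive."""

    def __init__(self):
        self._rows = defaultdict(lambda: {"submissions": 0, "total_seconds": 0.0})

    def ingest(self, batch):
        """Fold a batch of raw submission events into the running totals."""
        for event in batch:
            row = self._rows[(event["form_id"], event["utm_source"])]
            row["submissions"] += 1
            row["total_seconds"] += event["seconds_to_complete"]

    def query(self, form_id):
        """Answer from the pre-aggregated rows only; raw events are not rescanned."""
        return {
            source: {
                "submissions": row["submissions"],
                "avg_seconds": row["total_seconds"] / row["submissions"],
            }
            for (fid, source), row in self._rows.items()
            if fid == form_id
        }


# Hypothetical usage with made-up events.
rollup = SubmissionRollup()
rollup.ingest([
    {"form_id": "abc", "utm_source": "facebook", "seconds_to_complete": 100.0},
    {"form_id": "abc", "utm_source": "facebook", "seconds_to_complete": 140.0},
])
rollup.ingest([
    {"form_id": "abc", "utm_source": "instagram", "seconds_to_complete": 60.0},
])
summary = rollup.query("abc")
```

The trade-off is the usual one: a little extra work on every write in exchange for much cheaper reads, which suits a dashboard that is queried far more often than its aggregates change shape.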

We were now able to feed our dashboard from the form_submission materialized view.

We now had a few forms sending data to Tinybird. We had noticed how fast Tinybird could process events, so we decided to go even further and send more events from even more forms to Tinybird. In total, we sent 3 million events in 12 hours using Tinybird’s High Frequency Ingestion endpoint. We didn’t even have to warn them. The entire time, P99 latency stayed beneath 100ms.
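For a sense of scale, the average ingestion rate implied by those figures is simple arithmetic. The calculation below assumes the events were spread evenly over the 12 hours, which real traffic is not, so actual peaks would have been higher.

```python
TOTAL_EVENTS = 3_000_000
WINDOW_SECONDS = 12 * 60 * 60  # 12 hours = 43,200 seconds

# Average events per second over the whole window.
average_rate = TOTAL_EVENTS / WINDOW_SECONDS

print(f"{average_rate:.1f} events per second on average")
```

That works out to roughly 69 events per second on average.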

Lastly, we integrated the API with our frontend to test the user experience. As you can see at the top of this post, you can play with the filters with almost no latency.

And that’s it. We managed to build new and valuable functionality over the course of a hackathon. As a developer, what I liked about the experience and the service was that it took next to zero configuration to get started, the API docs were clear, and large queries can be split into smaller steps (nodes).